Hyperparameter tuning is the process of optimizing the parameters that guide a machine learning model’s learning process. It enhances model performance and accuracy.

Hyperparameter tuning is a key step in refining machine learning models: well-chosen hyperparameters can significantly improve performance. Common methods include grid search, random search, and Bayesian optimization. Each has its own advantages and suits different types of problems.

Grid search evaluates every combination in a predefined set of candidate values, while random search samples combinations at random. Bayesian optimization uses the results of past evaluations to choose the next set of hyperparameters, so it often needs fewer evaluations to reach a good configuration. Understanding and applying these methods leads to better, more accurate models, which are essential for achieving strong results in machine learning projects.

The Essence Of Hyperparameter Tuning

Hyperparameters are settings of a machine learning model that are not learned from the data. Instead, they are fixed before training begins. Examples include the learning rate and the batch size. Choosing them well is crucial, because they can affect model performance significantly.

Tuning searches for the hyperparameter values that make the model perform best on unseen data. There are different methods for this: grid search and random search are common techniques, and automated tools can take over much of the process. Good tuning can reduce errors and improve accuracy.

Types Of Hyperparameters

Model-specific hyperparameters define the structure or behavior of the model itself. Examples include the number of layers in a neural network and the maximum depth of a decision tree. These hyperparameters usually need tuning for best performance, and good values are often found through experiments.

Training-process hyperparameters affect how the model learns. Examples are the learning rate, the batch size, and the number of epochs. The learning rate sets the size of each gradient update. The batch size determines how many samples are used to compute each update. The number of epochs is how many full passes the model makes over the training data. Choosing the right values is crucial for model accuracy.
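To make the distinction concrete, here is a minimal sketch assuming a toy linear model trained with plain mini-batch gradient descent in pure Python. The function `train_linear_sgd` and the synthetic data are illustrative and do not come from any library. `learning_rate`, `batch_size`, and `epochs` are hyperparameters fixed before training, while `w` and `b` are the parameters learned from the data.

```python
import random

def train_linear_sgd(xs, ys, learning_rate=0.1, batch_size=4, epochs=300, seed=0):
    """Fit y ~ w*x + b by mini-batch gradient descent on mean squared error.

    Hyperparameters (chosen before training): learning_rate, batch_size, epochs.
    Parameters (learned from the data): w and b.
    """
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    order = list(range(len(xs)))
    for _ in range(epochs):                          # one epoch = one full pass over the data
        rng.shuffle(order)
        for start in range(0, len(order), batch_size):
            batch = order[start:start + batch_size]  # samples used for this update
            grad_w = grad_b = 0.0
            for i in batch:
                err = (w * xs[i] + b) - ys[i]
                grad_w += 2 * err * xs[i] / len(batch)
                grad_b += 2 * err / len(batch)
            w -= learning_rate * grad_w              # step size set by the learning rate
            b -= learning_rate * grad_b
    return w, b

# Synthetic, noise-free data from y = 2x + 1.
xs = [i / 10 for i in range(20)]
ys = [2 * x + 1 for x in xs]
w, b = train_linear_sgd(xs, ys)
print(round(w, 3), round(b, 3))  # 2.0 1.0
```

Changing `learning_rate` or `batch_size` changes how quickly, and whether, training converges. Exploring those effects is what tuning is about.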

Key Techniques In Hyperparameter Tuning

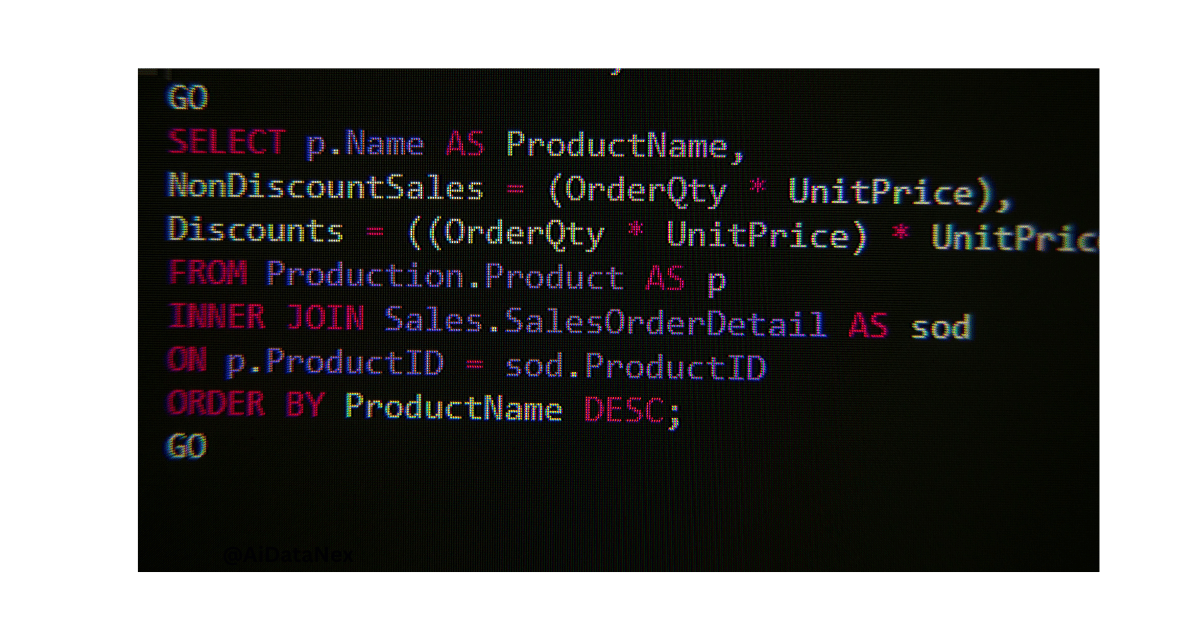

Grid Search is a simple way to find good hyperparameters. You specify a list of candidate values for each hyperparameter, and grid search trains and evaluates the model on every combination, keeping the best one. This is thorough but can be time-consuming, because the number of combinations multiplies with every hyperparameter you add. Grid Search is often used in machine learning to improve model performance. It is guaranteed to find the best combination within the grid, but not necessarily the best possible values, which may lie between grid points.
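A minimal grid search can be written with `itertools.product`. This sketch assumes a hypothetical `validation_error` function that stands in for "train the model and score it on validation data." In practice, that call is the expensive part. The grid below has 3 x 4 = 12 combinations, and each extra hyperparameter multiplies that count.

```python
import itertools
import math

def validation_error(lr, depth):
    """Hypothetical stand-in for training a model and scoring it on validation data.
    Its minimum is at lr=0.01, depth=4 purely by construction."""
    return (math.log10(lr) + 2) ** 2 + 0.1 * (depth - 4) ** 2

def grid_search(objective, grid):
    """Evaluate every combination in `grid` (a dict of name -> list of candidate values)."""
    names = list(grid)
    best_params, best_score, n_evals = None, math.inf, 0
    for values in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        score = objective(**params)
        n_evals += 1
        if score < best_score:  # lower validation error is better
            best_params, best_score = params, score
    return best_params, best_score, n_evals

grid = {"lr": [0.001, 0.01, 0.1], "depth": [2, 4, 6, 8]}
best, score, n = grid_search(validation_error, grid)
print(best, n)  # {'lr': 0.01, 'depth': 4} 12
```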

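Random Search samples random combinations of hyperparameter values, typically from ranges or distributions you define, and evaluates a fixed number of them. Because it does not test every possible combination, it is usually much cheaper than Grid Search for a comparable result. For the same budget, it also tries more distinct values of each hyperparameter, which helps when only a few hyperparameters strongly affect performance. Random Search is useful when you have limited time or resources, and it is often used in deep learning tasks.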
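The sketch below shows random search with Python's standard `random` module. It assumes the learning rate is sampled log-uniformly between 1e-4 and 1e-1 and the depth uniformly among the integers 2 to 8. These ranges, the seed, the trial count, and the hypothetical `validation_error` stand-in are all illustrative choices.

```python
import math
import random

def validation_error(lr, depth):
    """Hypothetical stand-in for training + validation; minimum at lr=0.01, depth=4."""
    return (math.log10(lr) + 2) ** 2 + 0.1 * (depth - 4) ** 2

def random_search(objective, n_trials, seed=0):
    """Evaluate `n_trials` randomly sampled configurations and keep the best."""
    rng = random.Random(seed)
    best_params, best_score = None, math.inf
    history = []
    for _ in range(n_trials):
        params = {
            "lr": 10 ** rng.uniform(-4, -1),  # log-uniform in [1e-4, 1e-1]
            "depth": rng.randint(2, 8),       # integer in [2, 8], inclusive
        }
        score = objective(**params)
        history.append((params, score))
        if score < best_score:
            best_params, best_score = params, score
    return best_params, best_score, history

best, score, history = random_search(validation_error, n_trials=12, seed=42)
print(best, round(score, 3))
```

Sampling the learning rate on a log scale gives each order of magnitude an equal share of the trials. A uniform draw between 1e-4 and 1e-1 would rarely produce values below 0.01.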

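Bayesian Optimization is a smarter way to search. It fits a probabilistic surrogate model, often a Gaussian process, to the results observed so far, and uses it to choose the next set of hyperparameters to try. Because each choice is informed by past results, it typically needs fewer evaluations than Grid or Random Search, which matters most when each training run is expensive. It is popular in both machine learning and deep learning. The method balances exploration (trying regions where the outcome is uncertain) against exploitation (refining regions that already look good), which usually leads to strong results, though not guaranteed ones.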
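Bayesian optimization has many variants. This sketch uses one common combination, written in plain Python so every step is visible: a Gaussian-process surrogate with a squared-exponential (RBF) kernel and the expected-improvement acquisition function. It tunes a single value, log10 of the learning rate, against a hypothetical `objective`. The kernel length scale, the 301-point candidate grid, and the three starting points are assumptions. Production libraries also fit the surrogate's own settings, search many dimensions, and add numerical safeguards.

```python
import math

def objective(log_lr):
    # Hypothetical stand-in for an expensive training run; lowest at log10(lr) = -2.
    return (log_lr + 2.0) ** 2

def rbf(a, b, length_scale=0.5):
    # Squared-exponential kernel: nearby inputs get strongly correlated predictions.
    return math.exp(-0.5 * ((a - b) / length_scale) ** 2)

def cholesky(A):
    n = len(A)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = math.sqrt(A[i][i] - s) if i == j else (A[i][j] - s) / L[j][j]
    return L

def forward(L, b):
    # Solve L x = b for lower-triangular L.
    x = []
    for i in range(len(b)):
        x.append((b[i] - sum(L[i][k] * x[k] for k in range(i))) / L[i][i])
    return x

def backward(L, b):
    # Solve L^T x = b, reading L^T directly from L.
    n = len(b)
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (b[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))) / L[i][i]
    return x

def expected_improvement(mu, sigma, best):
    # EI for minimisation: expected amount by which f(x) falls below `best`.
    z = (best - mu) / sigma
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    return (best - mu) * cdf + sigma * pdf

def bayes_opt(n_iter=10, noise=1e-6):
    candidates = [-4.0 + 3.0 * i / 300 for i in range(301)]  # log10(lr) in [-4, -1]
    tried = [0, 150, 300]                                    # initial design
    ys = [objective(candidates[i]) for i in tried]
    for _ in range(n_iter):
        xs = [candidates[i] for i in tried]
        mean = sum(ys) / len(ys)
        std = math.sqrt(sum((y - mean) ** 2 for y in ys) / len(ys)) or 1.0
        z = [(y - mean) / std for y in ys]                   # standardised targets
        K = [[rbf(a, b) + (noise if i == j else 0.0) for j, b in enumerate(xs)]
             for i, a in enumerate(xs)]
        L = cholesky(K)
        alpha = backward(L, forward(L, z))                   # K^{-1} z
        best_z, best_ei, best_idx = min(z), -1.0, None
        for idx, x in enumerate(candidates):
            if idx in tried:                                 # never re-evaluate a point
                continue
            k = [rbf(x, xi) for xi in xs]
            mu = sum(ki * ai for ki, ai in zip(k, alpha))    # posterior mean
            v = forward(L, k)
            sigma = math.sqrt(max(1.0 - sum(vi * vi for vi in v), 1e-12))  # posterior std
            ei = expected_improvement(mu, sigma, best_z)
            if ei > best_ei:
                best_ei, best_idx = ei, idx
        tried.append(best_idx)                               # one expensive evaluation per step
        ys.append(objective(candidates[best_idx]))
    i_best = min(range(len(ys)), key=ys.__getitem__)
    return candidates[tried[i_best]], ys[i_best], len(ys)

best_x, best_y, n_evals = bayes_opt()
print(f"best log10(lr) = {best_x:.2f} after {n_evals} evaluations")
```

The surrogate is cheap to query, so the loop can scan all candidates for the highest expected improvement before spending one real evaluation. This is the source of the efficiency described above.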

Automated Hyperparameter Tuning Tools

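Several automated hyperparameter tuning tools are popular among data scientists. Grid Search and Random Search are the traditional methods, available for example through scikit-learn's GridSearchCV and RandomizedSearchCV. Bayesian Optimization offers a more sophisticated approach. Hyperopt and Optuna are newer libraries with advanced features, including Bayesian-style samplers such as the Tree-structured Parzen Estimator (TPE). Google's AutoML offers a managed cloud service, while Microsoft's NNI (Neural Network Intelligence) is an open-source toolkit that can run experiments locally or on remote and cloud resources. These tools help save time and effort.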

Automation in hyperparameter tuning has both advantages and disadvantages. The pros include saving time and reducing manual work. Automated tools can handle large datasets and search spaces efficiently, and they often find good hyperparameters more quickly than manual tuning. On the other hand, an extensive automated search can overfit the validation data, so the chosen configuration looks better than it really is. Automated tools also do not understand the context of the data the way a domain expert does. They can be costly too, especially cloud-based solutions.

Evaluating Tuning Effectiveness

Accuracy is the fraction of all predictions that are correct. Precision is the fraction of predicted positives that are actually positive. Recall is the fraction of actual positives that the model finds. The F1 score combines precision and recall into one metric by taking their harmonic mean. AUC-ROC summarizes the model's performance across all classification thresholds. Log loss measures how close the predicted probabilities are to the actual labels and penalizes confident wrong predictions heavily.
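These definitions are easy to compute by hand. The sketch below implements them for binary labels (1 = positive) in plain Python, and the function names are illustrative. `roc_auc` relies on a standard equivalence: AUC-ROC equals the probability that a randomly chosen positive example scores higher than a randomly chosen negative one, with ties counted as half.

```python
import math

def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall and F1 for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": (tp + tn) / len(pairs), "precision": precision,
            "recall": recall, "f1": f1}

def log_loss(y_true, probs, eps=1e-15):
    """Mean negative log-likelihood of the true labels under predicted P(y=1)."""
    total = 0.0
    for t, p in zip(y_true, probs):
        p = min(max(p, eps), 1 - eps)  # clip to avoid log(0)
        total += -(t * math.log(p) + (1 - t) * math.log(1 - p))
    return total / len(y_true)

def roc_auc(y_true, scores):
    """Fraction of (positive, negative) pairs ranked correctly; ties count 0.5."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

m = classification_metrics([1, 0, 1, 1, 0, 1], [1, 0, 0, 1, 1, 1])
print(m)  # accuracy 4/6, precision 0.75, recall 0.75, f1 0.75
print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))  # 0.75
```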

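K-Fold Cross-Validation splits the data into K parts (folds). The model is trained K times, each time holding out a different fold as test data and training on the rest, and the K scores are averaged. Stratified K-Fold keeps the class ratio roughly the same in every fold. Leave-One-Out Cross-Validation (LOOCV) is the extreme case: each data point serves once as the test set while the rest are used for training. Time Series Split is for time-dependent data. It ensures the training data always comes before the test data. Nested Cross-Validation is useful when you tune hyperparameters and also want an honest performance estimate. An inner loop selects the hyperparameters, and an outer loop evaluates the tuned model on data the inner loop never saw.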
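The two splitting schemes can be sketched as index generators in plain Python. The function names are illustrative. The k-fold version uses contiguous folds without shuffling, and real data should usually be shuffled first unless its order matters. The time-series version uses an expanding training window and gives any leftover samples to the first training set.

```python
def k_fold_indices(n_samples, k):
    """Yield (train, test) index lists for k contiguous folds whose sizes differ by at most one."""
    base, extra = divmod(n_samples, k)
    start = 0
    for fold in range(k):
        size = base + (1 if fold < extra else 0)
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, test
        start += size

def time_series_splits(n_samples, n_splits):
    """Yield expanding-window splits where every test block comes after its training data."""
    test_size = n_samples // (n_splits + 1)
    for i in range(1, n_splits + 1):
        test_start = n_samples - (n_splits - i + 1) * test_size
        yield list(range(test_start)), list(range(test_start, test_start + test_size))

folds = list(k_fold_indices(10, 3))
for train, test in folds:
    print("k-fold test fold:", test)
# k-fold test fold: [0, 1, 2, 3]
# k-fold test fold: [4, 5, 6]
# k-fold test fold: [7, 8, 9]

splits = list(time_series_splits(10, 4))
for train, test in splits:
    print("train", train, "test", test)
```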

Challenges In Hyperparameter Tuning

Overfitting happens when a model learns the noise in the training data. It becomes too complex and performs poorly on new data. Cross-validation does not prevent overfitting by itself, but it gives a more reliable estimate of performance on new data. That helps you choose hyperparameters that generalize. Regularization methods like L1 or L2 can also help. They add a penalty on large coefficients, pushing the model toward simpler solutions, and the strength of that penalty is itself a hyperparameter to tune.
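Here is a small illustration of both ideas, assuming one-feature ridge (L2) regression without an intercept so the fit has a closed form, w = sum(x*y) / (sum(x^2) + lambda). The data, the lambda grid, and the interleaved 3-fold scheme are illustrative assumptions. The coefficient shrinks as the penalty grows, and cross-validation picks the penalty strength.

```python
def fit_ridge_1d(xs, ys, lam):
    """Closed-form minimiser of sum((y - w*x)**2) + lam * w**2 (no intercept, for brevity)."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)

def cv_mse(xs, ys, lam, k=3):
    """Held-out mean squared error, averaged over k interleaved folds."""
    n = len(xs)
    fold_errors = []
    for f in range(k):
        test = [i for i in range(n) if i % k == f]
        train = [i for i in range(n) if i % k != f]
        w = fit_ridge_1d([xs[i] for i in train], [ys[i] for i in train], lam)
        fold_errors.append(sum((ys[i] - w * xs[i]) ** 2 for i in test) / len(test))
    return sum(fold_errors) / k

# Synthetic data: y = 3x plus an alternating +/-0.3 "noise" term.
xs = [(i - 10) / 10 for i in range(21)]
ys = [3 * x + 0.3 * (-1) ** i for i, x in enumerate(xs)]

lambdas = [0.0, 0.1, 1.0, 10.0, 100.0]
coefs = [fit_ridge_1d(xs, ys, lam) for lam in lambdas]
errors = {lam: cv_mse(xs, ys, lam) for lam in lambdas}
best_lam = min(errors, key=errors.get)
print([round(c, 3) for c in coefs])  # [3.0, 2.962, 2.655, 1.305, 0.214]
print("lambda chosen by cross-validation:", best_lam)
```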

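High-dimensional problems make hyperparameter tuning hard in two ways. First, data with many features raises training cost and the risk of overfitting. Dimensionality reduction techniques like PCA can help by reducing the number of features while keeping most of the important information. Second, a search space with many hyperparameters is hard to cover, because the size of a full grid grows exponentially with the number of hyperparameters. Random search samples a fixed number of combinations regardless of dimension, so it often scales better than grid search. Using these methods, you can find good hyperparameters for your model even when the space is large.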
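The arithmetic behind that scaling argument is short. This sketch assumes 5 candidate values per hyperparameter for the grid. For random search, it assumes the "good" configurations occupy 5% of the space being sampled and that trials are independent. These assumptions are illustrative, and the function names are hypothetical.

```python
import math

def grid_size(values_per_hyperparameter, n_hyperparameters):
    """Combinations in a full grid with the same number of values on every axis."""
    return values_per_hyperparameter ** n_hyperparameters

def prob_hit_top(n_trials, top_fraction=0.05):
    """Chance that at least one of n independent random draws lands in the best
    `top_fraction` of the sampled search space."""
    return 1 - (1 - top_fraction) ** n_trials

def trials_needed(confidence=0.95, top_fraction=0.05):
    """Smallest n with prob_hit_top(n, top_fraction) >= confidence."""
    return math.ceil(math.log(1 - confidence) / math.log(1 - top_fraction))

for d in (2, 4, 6):
    print(d, "hyperparameters, 5 values each:", grid_size(5, d), "grid points")
# 25, 625 and 15625 grid points respectively
print(trials_needed())  # 59 random trials, independent of the number of hyperparameters
```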

Best Practices For Efficient Tuning

Begin with simple models, since they are easier to tune. Grid search over a few values per hyperparameter is a good starting point. Record the results and use them to narrow down the most promising values. Random search can also be effective, because for the same budget it tries more distinct values of each hyperparameter. Start with a small subset of the data to save time and resources, and run the final search on the full dataset.

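Start with a broad range for each hyperparameter and gradually narrow it down. First, identify the most important hyperparameters. Tuning one at a time while keeping the others constant is simple, though it can miss interactions between hyperparameters. The learning rate is often crucial. Good values can span several orders of magnitude, so search it on a logarithmic scale (for example 0.0001, 0.001, 0.01, 0.1) before refining in smaller steps. Batch size can also affect performance, so test several sizes to find the best one. Use cross-validation to evaluate each configuration so the results are reliable.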
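A broad-to-narrow search on a log scale can be sketched in a few lines. This is a minimal sketch assuming a hypothetical one-dimensional `validation_error` with its best value at a learning rate of 3e-3. The starting range, the 6 points per round, and the 3 rounds are arbitrary choices. After each round, the range shrinks to the neighbors of the best point.

```python
import math

def validation_error(lr):
    """Hypothetical stand-in for training + validation; best at lr = 3e-3 by construction."""
    return (math.log10(lr) - math.log10(3e-3)) ** 2

def log_grid(low, high, n):
    """n values evenly spaced in log10 between low and high, inclusive."""
    lo, hi = math.log10(low), math.log10(high)
    return [10 ** (lo + i * (hi - lo) / (n - 1)) for i in range(n)]

def coarse_to_fine(objective, low=1e-5, high=1.0, n=6, rounds=3):
    best_lr, best_err = None, math.inf
    for _ in range(rounds):
        grid = log_grid(low, high, n)
        errors = [objective(lr) for lr in grid]
        i = min(range(n), key=errors.__getitem__)
        if errors[i] < best_err:
            best_lr, best_err = grid[i], errors[i]
        # Narrow the range to the best point's neighbours for the next round.
        low, high = grid[max(i - 1, 0)], grid[min(i + 1, n - 1)]
    return best_lr

best = coarse_to_fine(validation_error)
print(f"best learning rate: {best:.2g}")
```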

Advanced Topics In Hyperparameter Tuning

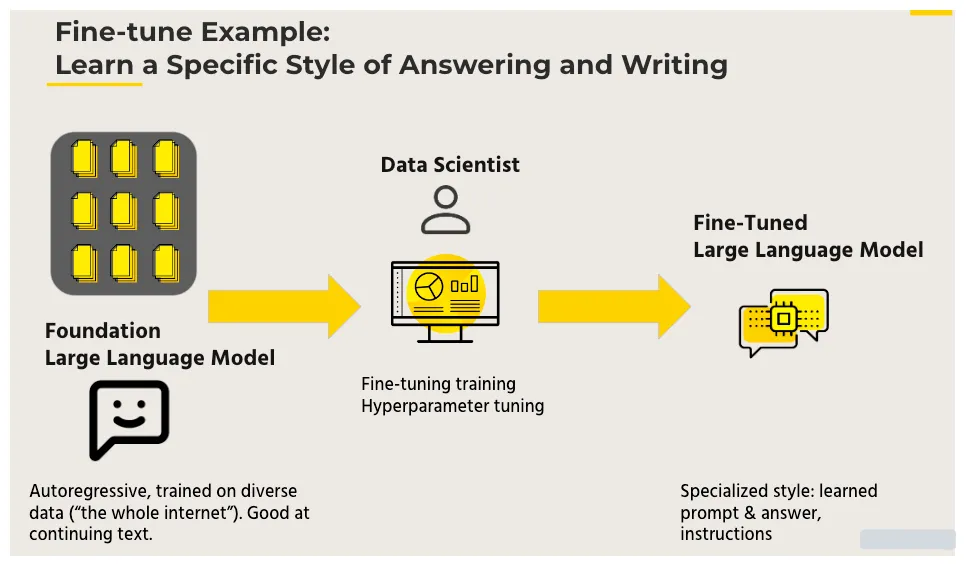

Transfer learning can help improve model performance. It uses knowledge from one task to boost another, which saves time and resources. A pre-trained model already has weights learned on a similar task, so fine-tuning it usually needs fewer hyperparameter adjustments than training from scratch, although settings such as the fine-tuning learning rate still need care. Transfer learning is useful in image and text analysis. It reduces the need for large labeled datasets, which makes it popular in many industries.

Meta-learning focuses on learning how to learn: it uses experience from previous tasks to guide new ones. Applied to hyperparameter tuning, it can draw on the results of past tuning runs on similar datasets to choose promising starting configurations, so models adapt quickly and perform well on new tasks. This can save a lot of time and increases efficiency. Meta-learning is useful in dynamic environments, where it helps models stay relevant and accurate.
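One simple form of this idea appears as a warm-start step in some AutoML systems. Each dataset is described by a few summary statistics (meta-features), and the search starts from the configurations that worked best on the most similar past datasets. In the sketch below, the past records, the three meta-features, and the function names are hypothetical. The meta-features are assumed to be pre-scaled to comparable ranges so that Euclidean distance is meaningful.

```python
import math

# Hypothetical records from earlier tuning runs:
# (meta-features of a past dataset, best configuration found on it).
PAST_RUNS = [
    ((3.0, 0.5, 0.10), {"lr": 0.01, "depth": 4}),
    ((4.0, 0.2, 0.50), {"lr": 0.003, "depth": 6}),
    ((5.0, 0.9, 0.30), {"lr": 0.03, "depth": 8}),
    ((3.2, 0.4, 0.15), {"lr": 0.02, "depth": 3}),
]

def warm_start_configs(meta_features, past_runs, k=2):
    """Return the best configurations of the k most similar past datasets."""
    ranked = sorted(past_runs, key=lambda run: math.dist(meta_features, run[0]))
    return [config for _, config in ranked[:k]]

# Meta-features of a new (hypothetical) dataset.
initial = warm_start_configs((3.1, 0.45, 0.12), PAST_RUNS)
print(initial)  # configurations to evaluate first, before any random or Bayesian search
```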

Case Studies

The examples below show how hyperparameter tuning has contributed to results in research and industry, and what real-world projects can teach about tuning your own models.

Success Stories In Deep Learning

Google has used hyperparameter tuning to improve its speech recognition systems, achieving a significant boost in accuracy. DeepMind tuned AlphaGo's hyperparameters to strengthen its play, one of many factors that helped AlphaGo defeat human champions. OpenAI has also relied on tuning when training its GPT models, which contributed to more coherent and relevant outputs.

Real-world Tuning Scenarios And Outcomes

In healthcare, tuning has helped models identify diseases in medical images, supporting better diagnosis and patient care. Financial firms tune their trading algorithms to predict market trends more accurately and thereby improve profit margins. E-commerce platforms tune their recommendation systems to improve user experience and sales.

Frequently Asked Questions

What Are The Steps Of Hyperparameter Tuning?

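1. Choose a model.
2. Select the hyperparameters to tune and a range of values for each.
3. Choose a search method (grid search, random search, Bayesian optimization, etc.).
4. Split the data into training and validation sets, or set up cross-validation.
5. Evaluate model performance for each candidate, adjust the hyperparameters accordingly, and keep the best configuration.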
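The five steps can be traced in one small script. This is a minimal sketch assuming a k-nearest-neighbors classifier on hypothetical one-dimensional data, where the number of neighbors `k` is the hyperparameter. The data, the split rule, the candidate values, and the function names are all illustrative.

```python
def make_data():
    """Hypothetical 1-D data: the label flips every 10 units, with four noisy labels."""
    noisy = {3, 14, 25, 36}
    data = []
    for x in range(40):
        y = (x // 10) % 2
        data.append((x, 1 - y if x in noisy else y))
    return data

def knn_predict(train, x, k):
    """Majority vote among the k training points closest to x."""
    neighbours = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    votes = sum(label for _, label in neighbours)
    return 1 if votes * 2 > k else 0

def accuracy(train, data, k):
    return sum(knn_predict(train, x, k) == y for x, y in data) / len(data)

# Steps 1-3: model = k-NN classifier, hyperparameter = k, search method = grid search.
grid = [1, 3, 5, 7]

# Step 4: split into training and validation sets.
data = make_data()
train = [p for p in data if p[0] % 4 != 2]
val = [p for p in data if p[0] % 4 == 2]

# Step 5: evaluate every candidate on the validation set and keep the best.
scores = {k: accuracy(train, val, k) for k in grid}
best_k = max(scores, key=scores.get)
print(scores, "best k:", best_k)
```

After choosing `k`, a final check on a separate test set that played no part in the search gives an unbiased estimate of performance.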

What Is The Best Method For Hyperparameter Tuning?

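No single method is best for every problem, but Bayesian Optimization is often the most efficient choice, especially when each training run is expensive. It searches the parameter space by balancing exploration and exploitation, which tends to find good values in fewer evaluations and improves model performance. For cheap models or small search spaces, grid or random search can be just as effective and simpler to run.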

Is Hyperparameter Tuning Necessary?

Hyperparameter tuning is usually essential for getting the best performance from a model. It helps find the hyperparameter values that give the best accuracy, whereas relying on untuned defaults can lead to suboptimal results. Efficient tuning can significantly enhance predictive power.

Is Hyperparameter Tuning Hard?

Yes, hyperparameter tuning can be challenging. It requires time, expertise, and experimentation to find optimal values.

Conclusion

Mastering hyperparameter tuning can significantly enhance your machine learning models by improving their performance. Experiment with different techniques and tools, and stay updated with the latest advancements. With practice, you’ll become proficient at tuning hyperparameters, which will lead to more accurate and reliable predictions.

Happy tuning!