PyTorch is an open-source deep learning framework originally developed by Facebook’s AI Research lab (now part of Meta AI). It is widely used for data science and machine learning.

PyTorch offers a flexible and efficient platform for building neural networks. Its dynamic computational graph allows for easy experimentation and debugging. Data scientists and researchers prefer PyTorch for its intuitive interface and extensive libraries. The framework supports various tasks, from natural language processing to computer vision.

PyTorch’s strong community and comprehensive documentation make it accessible for beginners and experts. Its seamless integration with Python enhances productivity and simplifies workflow. PyTorch empowers data scientists to bring innovative solutions to complex problems, making it a cornerstone tool in the data science field.

Introduction To PyTorch

PyTorch is an open-source machine learning library. It is based on the Torch library. PyTorch is widely used in data science. It helps in building deep learning models. This tool is very popular among researchers. It is known for its flexibility and speed. Many companies use PyTorch for their AI projects.

PyTorch is a favorite in the data science community. It supports dynamic computation graphs. This feature makes it easy to debug. PyTorch also integrates well with Python. Many data scientists prefer it over other libraries. It is also great for natural language processing tasks. PyTorch has a strong community and many tutorials.

Getting Started With PyTorch

First, make sure Python is installed. Check the version with `python --version`. Then install PyTorch with the command `pip install torch`. Verify the installation by running `import torch` in Python. If there are no errors, the installation was successful.
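A quick way to confirm the install is to print the version and check for GPU support. This is a minimal sketch; the version string and the GPU result depend entirely on your machine.

```python
import torch

# Print the installed version; the exact string depends on your install.
print(torch.__version__)

# True only if a CUDA-capable GPU and a CUDA build of PyTorch are present.
print(torch.cuda.is_available())
```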

PyTorch is built around tensors. Tensors are like arrays. Create a tensor with `torch.tensor()`. Perform basic operations like addition and multiplication. For example, `torch.add()` adds two tensors element by element. Use `torch.mul()` to multiply them element by element. The table below summarizes both calls:

| Operation | Code |
|---|---|
| Addition | `torch.add(tensor1, tensor2)` |
| Multiplication | `torch.mul(tensor1, tensor2)` |

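Tensors are easy to manipulate. Reshape a tensor with `tensor.view()`, which returns a new view over the same data. Convert a CPU tensor to a NumPy array using `tensor.numpy()`. The array shares memory with the tensor, so a change to one shows up in the other. Experiment with different operations.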
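The calls above can be tried together in a few lines. The names `tensor1` and `tensor2` follow the table; the values are made up for illustration.

```python
import torch

tensor1 = torch.tensor([[1.0, 2.0], [3.0, 4.0]])
tensor2 = torch.tensor([[10.0, 20.0], [30.0, 40.0]])

added = torch.add(tensor1, tensor2)        # same as tensor1 + tensor2
multiplied = torch.mul(tensor1, tensor2)   # element-wise, same as tensor1 * tensor2

flat = tensor1.view(4)       # reshape 2x2 into 4 elements (shares memory)
as_numpy = tensor1.numpy()   # CPU tensor to NumPy array (shares memory)

print(added)
print(multiplied)
print(flat)
print(as_numpy)
```

Note that `torch.mul()` is element-wise multiplication, not matrix multiplication. For a matrix product, use `torch.matmul()` or the `@` operator.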

Tensors: The Building Blocks

Tensors are like arrays. They store data. They hold numeric values such as integers, floats, and booleans. Text has to be encoded as numbers before it can go into a tensor. Tensors are used in many areas of data science. They help in machine learning and deep learning. You can think of them as building blocks. They help create complex models. Tensors come in different shapes and sizes. Some are one-dimensional. Others are two-dimensional or more.

You can do many operations with tensors. Addition and multiplication are common. You can also reshape tensors. Reshaping helps in changing their dimensions. Slicing is another operation. It allows you to get a part of a tensor. Transposing changes the order of dimensions. These operations make tensors very flexible.
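Slicing, transposing, and reshaping can be sketched on a small tensor. The variable names here are illustrative only.

```python
import torch

t = torch.arange(6).reshape(2, 3)   # [[0, 1, 2], [3, 4, 5]]

first_row = t[0]             # slicing: the first row
last_col = t[:, -1]          # slicing: the last column
transposed = t.T             # swaps the two dimensions, shape (3, 2)
reshaped = t.reshape(3, 2)   # same shape, but elements stay in row-major order

print(first_row)
print(last_col)
print(transposed)
print(reshaped)
```

Transposing and reshaping both produce a 3x2 tensor here, but the elements end up in different positions. Transposing swaps rows and columns, while reshaping only regroups the elements in their original order.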

Autograd: Automatic Differentiation

The Autograd system in PyTorch is very powerful. It helps you compute gradients automatically. This is useful for training neural networks. Autograd uses a technique called reverse-mode automatic differentiation. It records operations as you perform them. Then, it plays them backward to compute gradients. This is very efficient for deep learning.
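The record-then-replay idea can be seen on a one-variable function. This is a minimal sketch using y = x² + 2x, a function chosen here only for illustration.

```python
import torch

x = torch.tensor(3.0, requires_grad=True)

y = x ** 2 + 2 * x   # operations are recorded as they run
y.backward()         # walk the recorded graph backward

# dy/dx = 2x + 2, which is 8 at x = 3
print(x.grad)
```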

Gradient descent is a method to find the minimum of a function. In PyTorch, you can implement it easily. First, define your model and loss function. Then, compute the gradients of the loss. Update the model parameters using these gradients. Repeat this process many times. Your model will learn and improve.
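The steps above can be sketched by hand, without an optimizer class. The function (w - 5)², the learning rate, and the step count are assumptions picked for illustration.

```python
import torch

w = torch.tensor(0.0, requires_grad=True)  # the parameter to learn
lr = 0.1                                   # learning rate (illustrative)

for step in range(100):
    loss = (w - 5.0) ** 2      # function to minimize; minimum is at w = 5
    loss.backward()            # compute d(loss)/dw
    with torch.no_grad():      # update without recording the operation
        w -= lr * w.grad
    w.grad.zero_()             # gradients accumulate, so reset them

print(w.item())  # ends up very close to 5
```

Resetting the gradient each step matters because PyTorch adds new gradients to the existing ones by default.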

Building And Training Neural Networks

Neural networks are loosely inspired by brain cells. They have layers, nodes, and connections. Each layer has many nodes. These nodes are like neurons. Each connection between nodes carries a weight. The first layer is the input layer. It takes data in. The last layer is the output layer. It gives the result. Layers in between are hidden layers. They process the data. More layers mean more complex models. You can define these layers in PyTorch. Use the `torch.nn` module. It makes defining layers easy.
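A network with one hidden layer might look like this with `torch.nn`. The class name `SmallNet` and the layer sizes are hypothetical, chosen only for the example.

```python
import torch
from torch import nn

class SmallNet(nn.Module):
    """Hypothetical network: input layer -> hidden layer -> output layer."""

    def __init__(self, in_features=4, hidden=8, out_features=3):
        super().__init__()
        self.hidden = nn.Linear(in_features, hidden)    # weights: input to hidden
        self.output = nn.Linear(hidden, out_features)   # weights: hidden to output

    def forward(self, x):
        x = torch.relu(self.hidden(x))   # hidden layer with ReLU activation
        return self.output(x)

model = SmallNet()
batch = torch.randn(5, 4)   # 5 samples with 4 features each
logits = model(batch)
print(logits.shape)
```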

Loss functions measure errors in models. They tell how far predictions are from true values. Common loss functions are Mean Squared Error and Cross-Entropy Loss. Optimizers adjust weights to reduce loss. They improve model accuracy. Popular optimizers are SGD and Adam. In PyTorch, use the `torch.optim` module. It provides many optimizers. Choose the right loss function and optimizer. It helps in training better models.
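A loss function and an optimizer fit together in a short training loop. The sketch below uses synthetic data, Mean Squared Error, and SGD; the data, learning rate, and epoch count are assumptions for illustration.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Synthetic regression data: targets are a fixed linear function of the inputs.
inputs = torch.randn(64, 3)
targets = inputs @ torch.tensor([[2.0], [-1.0], [0.5]])

model = nn.Linear(3, 1)
loss_fn = nn.MSELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

initial_loss = loss_fn(model(inputs), targets).item()

for epoch in range(200):
    optimizer.zero_grad()                    # clear old gradients
    loss = loss_fn(model(inputs), targets)   # measure the error
    loss.backward()                          # compute gradients
    optimizer.step()                         # adjust the weights

final_loss = loss.item()
print(initial_loss, final_loss)
```

Swapping in Adam only changes one line: `torch.optim.Adam(model.parameters(), lr=...)`.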

Data Loading And Preprocessing

PyTorch has `DataLoader` and `Dataset` classes. These classes make it easy to load and preprocess data. The `Dataset` class lets you create a dataset object. This object holds your data and returns one sample at a time. The `DataLoader` class helps you load the dataset in batches. It can also shuffle the data. Loading in batches keeps memory use under control because only part of the data is processed at once.
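A custom dataset only needs `__len__` and `__getitem__`. The class `SquaresDataset` below is a hypothetical example that pairs each number with its square.

```python
import torch
from torch.utils.data import Dataset, DataLoader

class SquaresDataset(Dataset):
    """Hypothetical dataset: each sample is (x, x squared)."""

    def __init__(self, n):
        self.x = torch.arange(n, dtype=torch.float32)
        self.y = self.x ** 2

    def __len__(self):
        return len(self.x)

    def __getitem__(self, idx):
        return self.x[idx], self.y[idx]

dataset = SquaresDataset(10)
loader = DataLoader(dataset, batch_size=4, shuffle=False)

# 10 samples in batches of 4 give batches of size 4, 4, and 2.
batch_sizes = [len(xb) for xb, yb in loader]
print(batch_sizes)
```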

Data augmentation helps improve your model. It creates new data by modifying existing data. Techniques include flipping, rotating, and cropping images. You can also change the brightness or contrast. These techniques help the model learn better. They make the model more robust.
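Flipping and brightness changes can be sketched with plain tensor operations. The function `augment` and its parameters are hypothetical. In practice many projects use the ready-made transforms in the separate `torchvision` package instead.

```python
import torch

def augment(image, flip_prob=0.5, max_brightness_shift=0.2):
    """Randomly flip and brighten or darken one image.

    Assumes `image` is a float tensor of shape (channels, height, width)
    with values in the range [0, 1].
    """
    if torch.rand(1).item() < flip_prob:
        image = torch.flip(image, dims=[2])   # horizontal flip along the width axis
    shift = (torch.rand(1).item() * 2 - 1) * max_brightness_shift
    return (image + shift).clamp(0.0, 1.0)    # keep values in the valid range

image = torch.rand(3, 32, 32)   # random stand-in for a real image
augmented = augment(image)
print(augmented.shape)
```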

Advanced PyTorch Features

PyTorch lets you create custom modules easily. These modules can be used like Lego blocks. Functions in PyTorch are also customizable. This makes your code very flexible and reusable. You can define your own layers and operations. This helps in building complex models with ease. Custom modules and functions boost your productivity. They also make your code neat and organized.
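One way to define your own operation is to subclass `torch.autograd.Function` and then wrap it in a module. The names `Square` and `SquareLayer` are hypothetical; this is a sketch of the pattern, not the only way to build custom layers.

```python
import torch
from torch import nn

class Square(torch.autograd.Function):
    """Custom operation with a hand-written gradient."""

    @staticmethod
    def forward(ctx, x):
        ctx.save_for_backward(x)   # keep the input for the backward pass
        return x * x

    @staticmethod
    def backward(ctx, grad_output):
        (x,) = ctx.saved_tensors
        return grad_output * 2 * x   # derivative of x^2 is 2x

class SquareLayer(nn.Module):
    """Custom module that can be stacked like any built-in layer."""

    def forward(self, x):
        return Square.apply(x)

layer = SquareLayer()
x = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)
layer(x).sum().backward()
print(x.grad)   # gradient of sum(x^2) is 2x
```

Most custom layers do not need a hand-written backward pass. Composing existing tensor operations inside `forward` is usually enough, because Autograd derives the gradients on its own.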

PyTorch supports parallel computing. Tensor operations can use several CPU threads, and a model can run on one or more GPUs. Distributed computing is also possible with PyTorch. You can train models across different machines with the `torch.distributed` package. This speeds up the training process. It is very useful for large datasets. Parallel and distributed computing make your tasks faster and more efficient. They allow you to handle more data and bigger models.
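The sketch below shows two simple forms of parallelism on a single machine. It falls back to the CPU when no GPU is present. Wrapping with `nn.DataParallel` is the simplest option to show in a few lines; for serious multi-GPU or multi-machine training, the PyTorch documentation recommends `DistributedDataParallel`, which needs more setup than fits here.

```python
import torch
from torch import nn

model = nn.Linear(8, 2)

# CPU parallelism: number of threads PyTorch uses for tensor math.
print("CPU threads:", torch.get_num_threads())

# Single-machine multi-GPU: wrap only when more than one GPU is visible.
if torch.cuda.device_count() > 1:
    model = nn.DataParallel(model)

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model = model.to(device)

batch = torch.randn(16, 8, device=device)
out = model(batch)
print(out.shape)
```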

PyTorch In Practice

Many companies use PyTorch for their projects. Meta (formerly Facebook) uses it for image recognition. Uber built its Pyro probabilistic programming library on top of it. Researchers love PyTorch for its flexibility. It helps in quick experiments. PyTorch is also popular in academia. It allows for easy prototyping.

Process your data in batches. It makes training faster. Data augmentation can improve model performance. Regularly evaluate your model on held-out validation data, and keep the test set for the final check. This helps in early detection of issues such as overfitting. Make use of pre-trained models. They save time and resources. Optimize your code for better performance. Use a GPU whenever possible. It speeds up the training process.
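Holding out part of the data for validation can be sketched with `random_split`. The data here is random and the 80/20 split is an assumption for illustration.

```python
import torch
from torch import nn
from torch.utils.data import TensorDataset, DataLoader, random_split

torch.manual_seed(0)

# Random stand-in data: 100 samples, 4 features, 1 target each.
data = TensorDataset(torch.randn(100, 4), torch.randn(100, 1))
train_set, val_set = random_split(data, [80, 20])
val_loader = DataLoader(val_set, batch_size=10)

model = nn.Linear(4, 1)
loss_fn = nn.MSELoss()

model.eval()               # switch layers like dropout to evaluation mode
total = 0.0
with torch.no_grad():      # no gradients needed for evaluation
    for xb, yb in val_loader:
        total += loss_fn(model(xb), yb).item() * len(xb)

val_loss = total / len(val_set)
print(val_loss)
```

In a real project this check would run after each training epoch, with `model.train()` called again before training resumes.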

Debugging And Optimization

Errors in PyTorch can be tricky. Check your tensor shapes first. Mismatched shapes cause many problems. Inspect data types to avoid type errors. Use `print()` to display tensor values. This helps find unexpected values. Gradients can vanish or explode. Gradient clipping helps with exploding gradients. Memory use can also grow unexpectedly. Wrap inference code in `torch.no_grad()` so PyTorch does not store the computation graph. Device mismatch is another issue. Ensure the model and its input tensors are on the same device.
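These checks can be combined in one short script. The model and data are placeholders, and the clipping threshold of 1.0 is an arbitrary choice for illustration.

```python
import torch
from torch import nn

# Pick one device and put both the model and the data on it.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model = nn.Linear(4, 2).to(device)
x = torch.randn(8, 4).to(device)
y = torch.randn(8, 2).to(device)

# Inspect shape, data type, and device before anything else.
print(x.shape, x.dtype, x.device)

loss = nn.functional.mse_loss(model(x), y)
loss.backward()

# Rescale gradients so their combined norm is at most max_norm.
torch.nn.utils.clip_grad_norm_(model.parameters(), max_norm=1.0)

# Inference without building a computation graph.
with torch.no_grad():
    preds = model(x)
print(preds.requires_grad)
```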

Optimize your model for better speed. Use batch normalization to stabilize learning. Reduce precision to speed up training. Mixed precision can help. Profile your code with PyTorch tools. Identify slow parts and optimize them. Parallelize data loading with DataLoader. Use `num_workers` for this. Use GPU acceleration for faster computation. Ensure your GPU is utilized fully. Adjust learning rates carefully. A good learning rate speeds up convergence.
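Mixed precision can be sketched with `torch.autocast`. The model is a placeholder, and the choice of dtype per device is an assumption: float16 is common on GPUs, while bfloat16 is a safe choice for CPU autocast. A full GPU training loop usually adds gradient scaling, which is left out here.

```python
import torch
from torch import nn

device_type = "cuda" if torch.cuda.is_available() else "cpu"
dtype = torch.float16 if device_type == "cuda" else torch.bfloat16

model = nn.Linear(16, 4).to(device_type)
x = torch.randn(8, 16, device=device_type)

# Inside the context, eligible operations run in the lower-precision dtype.
with torch.autocast(device_type=device_type, dtype=dtype):
    out = model(x)

# The stored weights keep their original full precision.
print(out.dtype, model.weight.dtype)
```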

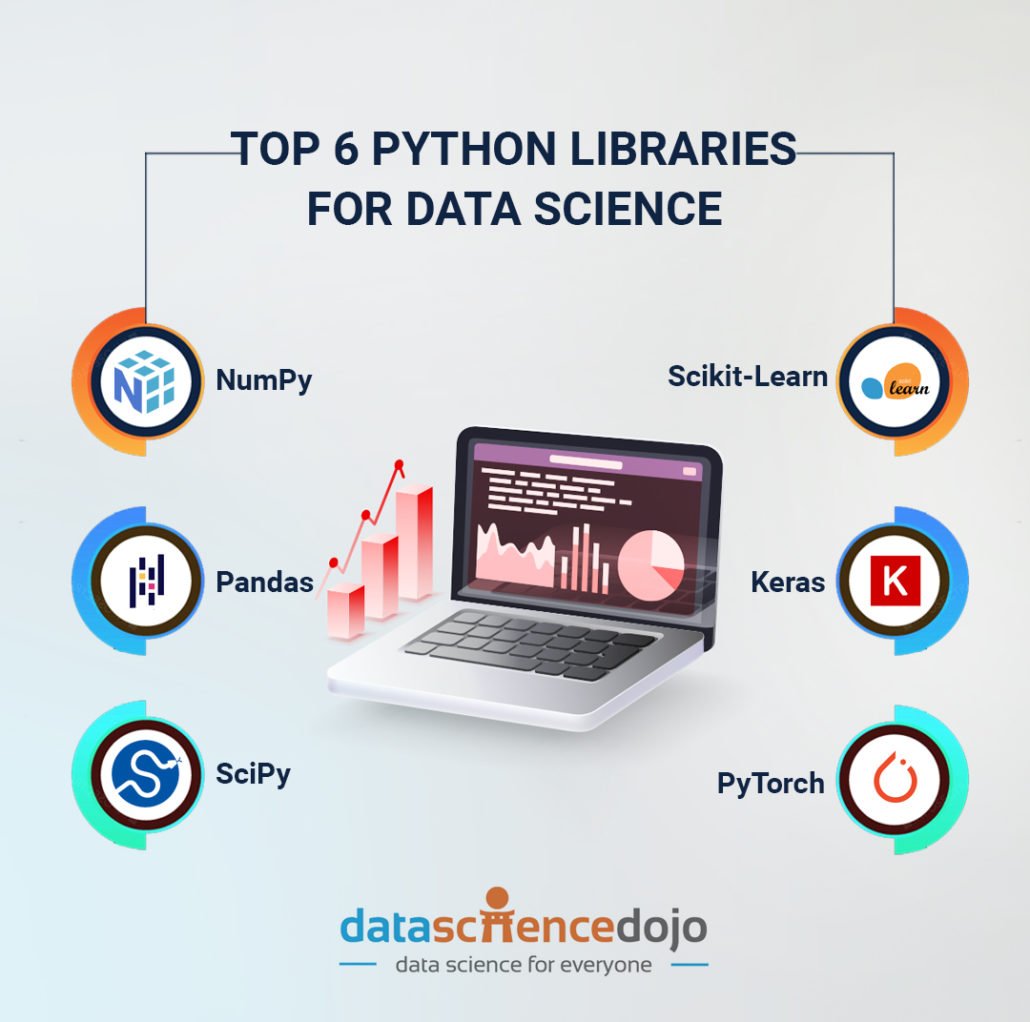

Credit: datasciencedojo.com

Staying Updated With PyTorch

New features in PyTorch come out often. Reading release notes helps you learn about them. Following PyTorch blogs keeps you informed. Joining PyTorch forums can also help. Many experts share their tips there. Watching tutorial videos is another good way. You can find many on YouTube. Attending PyTorch webinars is also useful. These webinars cover new updates in detail.

The PyTorch community is very active. Joining PyTorch groups on social media helps. Participating in online forums can also be beneficial. Reading PyTorch books gives you in-depth knowledge. Using official PyTorch documentation is crucial. It is always up-to-date and detailed. Attending PyTorch meetups is another great way. You can meet other users and learn from them.

Frequently Asked Questions

Is PyTorch Good For Data Science?

Yes, PyTorch is excellent for data science. It offers dynamic computation graphs, easy debugging, and strong community support.

What Do I Need To Know Before Learning PyTorch?

Understand Python basics and OOP concepts. Familiarize yourself with machine learning fundamentals. Install Anaconda for a smooth setup. Explore PyTorch documentation and tutorials. Practice coding regularly.

Does Tesla Use PyTorch Or TensorFlow?

Tesla has publicly described using PyTorch to train the neural networks behind its Autopilot system. This includes applications in autonomous driving.

How Hard Is PyTorch To Learn?

Learning PyTorch can be challenging for beginners. Its documentation and tutorials are helpful. Prior knowledge of Python and neural networks eases the process. With consistent practice, understanding PyTorch becomes manageable.

Conclusion

PyTorch simplifies data science by offering powerful tools for machine learning. Its flexibility and ease of use make it a strong choice. Whether you’re a beginner or an expert, PyTorch has features to support your projects. Start using PyTorch today to enhance your data science work and achieve better results.