Learning XGBoost can take a few days to a few weeks, depending on your prior experience with machine learning. Beginners might need more time to grasp advanced concepts.

XGBoost, or Extreme Gradient Boosting, is a powerful machine learning algorithm known for its speed and accuracy. It is widely used for structured (tabular) data and has appeared in many winning solutions to machine learning competitions. Mastering XGBoost involves understanding its parameters, tuning techniques, and integration with Python libraries.

Prior knowledge of machine learning fundamentals can significantly speed up the learning process. Numerous online resources, tutorials, and documentation are available to assist learners at different levels. Hands-on practice and experimenting with various datasets are essential for mastering XGBoost effectively.

Introduction To XGBoost

XGBoost (eXtreme Gradient Boosting) is a machine learning library known for its speed and performance. Developers use it to train models on large datasets, and it is popular in competitions such as those on Kaggle. It supports both classification and regression tasks and offers interfaces for several programming languages, with Python and R being the most common choices. Under the hood, XGBoost builds an ensemble of decision trees.

XGBoost offers many benefits. It is fast and efficient, and it handles missing values natively by learning a default split direction for them. It includes built-in cross-validation, can be tuned extensively for better results, and reports feature importance scores that help explain the model. Tree construction is parallelized across CPU cores, which speeds up training on large datasets. Its documentation is also thorough, which makes learning easier.


Factors Affecting XGBoost Learning Time

Dataset size and complexity affect how long XGBoost takes to train. Large datasets take more time to process, and complex data with many features or intricate relationships requires more computation per boosting round. Small, simple datasets train quickly. Even so, XGBoost is designed to handle large data efficiently.

Hardware matters too. More CPU cores let XGBoost evaluate candidate splits in parallel, more RAM allows larger datasets to fit in memory, and a supported GPU can speed up training considerably. Slower machines take longer, so efficient hardware reduces training time.

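Choosing the right parameters is crucial, because they affect both training time and accuracy. A smaller learning rate usually needs more trees to reach the same accuracy. Deeper trees require more computation per round, and the number of estimators (boosting rounds) directly scales total training time. Well-chosen settings make training efficient. Poor ones can slow it down without improving results.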

Getting Started With XGBoost

First, install Python if it is not already on your system, for example from python.org or through a distribution such as Anaconda. Next, install XGBoost using pip by typing pip install xgboost in the terminal. To check that the installation succeeded, open Python and type import xgboost. If no errors appear, XGBoost is ready to use.

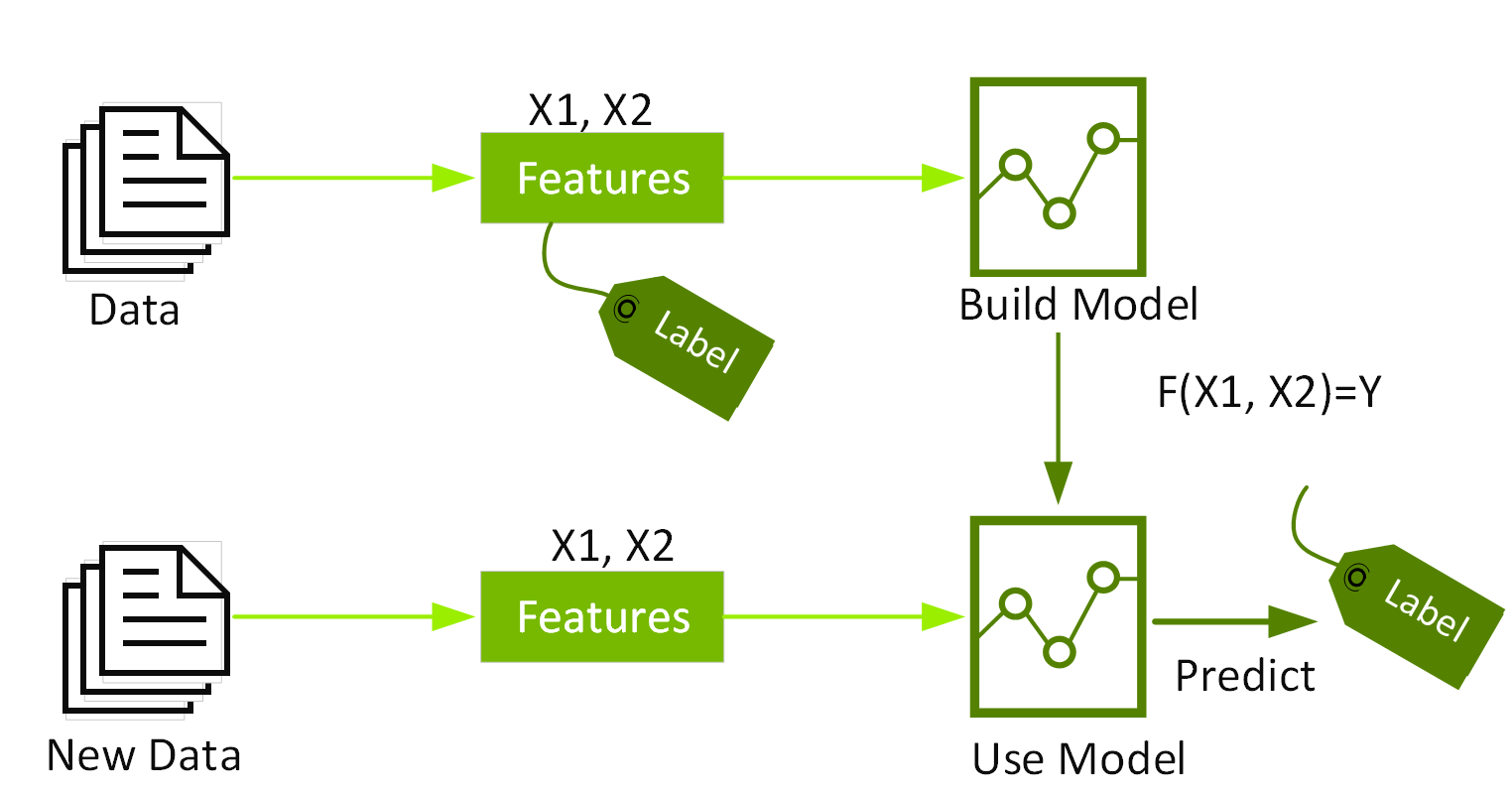

XGBoost is used for supervised learning problems, including regression and classification tasks. The library is efficient and flexible, and it is built on gradient boosting techniques.

Understanding decision trees is important. XGBoost builds many small trees in sequence, and each new tree corrects errors left by the trees before it. The learning rate (eta) scales each tree's contribution, controlling how quickly the model fits the data. Hyperparameters need tuning for best results, and regularization helps prevent overfitting. Practice will make you better at using XGBoost.
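The idea of trees correcting earlier errors, scaled by a learning rate, can be sketched from scratch. This is plain gradient boosting for squared error built on scikit-learn's `DecisionTreeRegressor`, not XGBoost's actual algorithm, which additionally uses second-order gradient information and regularized tree construction. The toy sine data is an assumption for illustration.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor

# Toy 1-D data: a noisy sine curve
rng = np.random.default_rng(0)
X = rng.uniform(-3, 3, size=(300, 1))
y = np.sin(X[:, 0]) + rng.normal(scale=0.1, size=300)

learning_rate = 0.1
n_trees = 100
prediction = np.full_like(y, y.mean())  # start from a constant prediction
errors = []

for _ in range(n_trees):
    # Residuals are the negative gradient of the loss 0.5 * (y - prediction)**2
    residuals = y - prediction
    tree = DecisionTreeRegressor(max_depth=2).fit(X, residuals)
    # Shrink each small tree's correction by the learning rate
    prediction += learning_rate * tree.predict(X)
    errors.append(np.mean((y - prediction) ** 2))

print(f"MSE after 1 tree: {errors[0]:.3f}, after {n_trees} trees: {errors[-1]:.3f}")
```

A smaller `learning_rate` makes each step more cautious, so more trees are needed to reach the same training error.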

Strategies For Efficient Learning

Mastering XGBoost can take a few weeks, depending on your prior experience. Regular practice sessions speed up understanding, and tutorials and hands-on projects make learning more efficient.

Focused Practice Projects

Focused practice is key. Start with small projects and build simple models on datasets that are easy to understand, which helps you grasp the basic concepts quickly. Then move gradually to more complex projects, making sure each one teaches you something new. This keeps learning engaging and effective.

Online Courses And Tutorials

Online courses are very helpful, and many platforms offer free tutorials covering basic to advanced topics. Video lessons are easy to follow and often include practical exercises, which help you retain information. Forums and discussion groups are also valuable for support and additional insights.

Optimizing XGBoost Performance

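Hyperparameter tuning helps improve XGBoost performance. Grid Search and Random Search are popular techniques. Grid Search evaluates every combination of values in a predefined grid, while Random Search samples a fixed number of random combinations from specified ranges, which is often more efficient when there are many parameters.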

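Bayesian Optimization is another method. It builds a probabilistic model of how parameters affect performance and uses it to choose promising settings to try next. Early Stopping halts training when performance on a validation set stops improving for a set number of rounds. This saves time and helps prevent overfitting.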

Feature engineering helps create better models. Removing irrelevant features can improve accuracy, and creating new features from existing ones can also help, for example by combining two features into one ratio.
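Both ideas fit in a few lines of pandas. The column names below are hypothetical, invented purely for illustration. The sketch derives a ratio feature from two raw columns and drops an identifier column that carries no predictive signal.

```python
import pandas as pd

# Hypothetical housing-style columns, made up for illustration
df = pd.DataFrame({
    "total_rooms": [6, 8, 4, 10],
    "households": [2, 4, 1, 5],
    "row_id": [101, 102, 103, 104],
})

# Combine two raw features into one ratio feature
df["rooms_per_household"] = df["total_rooms"] / df["households"]

# Drop a column with no predictive meaning, such as a row identifier
features = df.drop(columns=["row_id"])
print(features)
```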

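Normalizing data so that all features share the same scale matters for many algorithms. Tree-based models such as XGBoost, however, are largely insensitive to feature scaling, because splits depend only on the ordering of values within each feature. Handling missing values is also important. You can fill them with the mean or median, although XGBoost can also handle missing values natively.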

Common Pitfalls To Avoid

Overfitting happens when the model learns the noise in the training data, which makes it perform poorly on new data. Regularization techniques can help prevent it, and cross-validation helps detect it. Always evaluate the model on a validation set, and monitor training and validation errors: a widening gap between them is a warning sign.

XGBoost has many hyperparameters to tune, and ignoring them can lead to suboptimal models, since default settings rarely give the best performance on a given dataset. Grid search or random search can find better settings. Because XGBoost supports parallel processing, each training run in such a search is relatively fast.

Advanced XGBoost Concepts

Mastering advanced XGBoost concepts can take a few weeks to several months, depending on your background in machine learning. Consistent practice and hands-on projects significantly speed up the learning process.

Custom Objective Functions

Custom objective functions give you greater control over model behavior by letting you tailor the training loss to a specific problem. To define one, you supply a function that returns the gradient and Hessian (first and second derivatives) of the loss with respect to the model's predictions. This can improve performance when the built-in objectives do not match the metric you care about.

Handling Imbalanced Data

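Imbalanced data, where one class is much rarer than the other, can hurt model quality, and plain accuracy can be a misleading metric in this setting. XGBoost offers the scale_pos_weight parameter, which increases the weight given to positive-class examples during training. A common starting value is the number of negative examples divided by the number of positive examples. Another option is to resample the training data before fitting XGBoost, for example with SMOTE from the imbalanced-learn library. These methods can improve performance on imbalanced datasets.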


Measuring Mastery And Progress

Standard datasets are a good way to measure your progress while learning XGBoost. The Iris dataset is small and easy to understand and work with. The Boston Housing dataset is a more complex regression problem that provides a good challenge, though it was removed from scikit-learn in version 1.2. The California Housing dataset is a common substitute.

Kaggle competitions are great for learning XGBoost because they offer real-world problems. You can test your skills, learn from other participants' solutions, and pick up insights and tips in the Kaggle forums. Participating in these competitions helps you improve faster.


Frequently Asked Questions

How Long Does It Take To Train XGBoost?

Training XGBoost can take anywhere from a few seconds to several hours. The duration depends on data size, model complexity (the number and depth of trees), and hardware.

Why Is XGBoost Taking So Long?

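XGBoost may run slowly because of large datasets, complex models, or insufficient computational resources. To speed it up, tune parameters such as tree depth and the number of boosting rounds, use the histogram-based tree method (tree_method="hist"), or train on a GPU.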

Does XGBoost Have A Learning Rate?

Yes. XGBoost's learning rate parameter (eta, also accepted as learning_rate) controls the step size during training by shrinking each new tree's contribution.

Is XGBoost Deep Learning?

No, XGBoost is not deep learning. It is a gradient boosting library based on decision trees and is used for supervised learning tasks.

Conclusion

Mastering XGBoost requires dedication and consistent practice. How long it takes depends on your individual effort and prior knowledge. By investing time and using the available resources, you can learn XGBoost efficiently. Stay persistent and patient, and you will soon excel with this powerful machine learning tool.

Keep learning and growing in your data science journey.