Discover the top Python libraries that will streamline your NLP workflows. Compare NLTK, spaCy, and Gensim features. Boost your productivity and enhance your data science projects. Find your ideal tools!

Python’s extensive library ecosystem makes it a go-to language for data science, especially natural language processing (NLP). The seven libraries covered here each bring distinct capabilities to NLP tasks, from text preprocessing to advanced deep learning applications.

NLTK and spaCy are great for basic and intermediate tasks, while Gensim is perfect for topic modeling. TextBlob simplifies everyday text processing, Hugging Face Transformers brings pre-trained deep learning models within easy reach, and StanfordNLP (now Stanza) and Flair provide accurate, multilingual neural pipelines. Mastering these libraries will make your data science projects more efficient and effective.

Introduction To NLP And Data Science

Natural Language Processing (NLP) is a crucial branch of artificial intelligence. It focuses on the interaction between computers and human language. Data Science leverages NLP to extract meaningful insights from text data. Combining NLP with Data Science unlocks numerous opportunities for data analysis and automation.

Importance Of NLP

NLP helps machines understand human language, which is essential for applications such as sentiment analysis, language translation, and chatbots. By bridging the gap between human communication and computer understanding, NLP makes technology more accessible and user-friendly.

Here are some key benefits of NLP:

- Improved Customer Service: Chatbots provide instant support.

- Enhanced Data Analysis: Extract insights from social media.

- Automated Processes: Automate repetitive tasks with NLP algorithms.

Role In Data Science

NLP plays a significant role in Data Science. It helps in analyzing large volumes of text data. Data scientists use NLP to preprocess and clean data. This ensures better quality and accuracy of the analysis.

Common NLP tasks in Data Science include:

- Tokenization: Breaking text into smaller units.

- Named Entity Recognition (NER): Identifying key entities in text.

- Text Classification: Categorizing text into predefined labels.
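To make the tokenization step concrete, here is a minimal sketch that uses only Python's standard library. The regular expression is an illustrative assumption; the libraries covered below handle contractions, URLs, and other edge cases far more carefully.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Split text into lowercase word tokens and standalone punctuation marks."""
    # \w+ matches runs of letters/digits/underscores; [^\w\s] matches one punctuation character
    return re.findall(r"\w+|[^\w\s]", text.lower())


tokens = tokenize("NLP turns text into data. Data drives decisions!")
counts = Counter(tokens)  # token frequencies, a typical first step before analysis

print(tokens)
print(counts.most_common(1))
```

Counting tokens like this is often the first feature-extraction step before classification or topic modeling.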

Data Science and NLP together offer powerful tools. They enable better decision-making and improved business outcomes.

NLTK

The Natural Language Toolkit (NLTK) is a Python library for working with human language data and a long-standing staple of natural language processing (NLP).

Overview Of NLTK

NLTK is a leading platform for building Python programs that work with human language data. It provides easy-to-use interfaces to over 50 corpora and lexical resources, such as WordNet, along with a suite of text processing libraries for classification, tokenization, stemming, tagging, parsing, and semantic reasoning. NLTK suits both beginners and experienced NLP practitioners.

Key Features

- Corpora and Lexical Resources: Includes popular datasets and lexical tools.

- Text Processing Libraries: Tools for tokenization, stemming, and more.

- Machine Learning: Supports classification and clustering.

- Visualization: Tools for plotting and graphing text data.

- Comprehensive Documentation: Detailed guides and tutorials available.
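As a quick illustration of the text processing tools listed above, the sketch below tokenizes, stems, and counts words. It assumes NLTK 3.x and uses `wordpunct_tokenize`, a regex-based tokenizer that needs no downloaded data; `word_tokenize` usually gives better results but requires tokenizer models fetched with `nltk.download` first. The sample sentence is made up.

```python
from nltk import FreqDist
from nltk.stem import PorterStemmer
from nltk.tokenize import wordpunct_tokenize

text = "The cats are running. The dogs were running too!"

# Regex-based tokenizer: splits on word characters vs. punctuation, no downloads needed
tokens = wordpunct_tokenize(text)

# Porter stemming reduces inflected forms ("running" -> "run", "cats" -> "cat")
stemmer = PorterStemmer()
stems = [stemmer.stem(tok) for tok in tokens if tok.isalpha()]  # skip punctuation tokens

# Frequency distribution over the stems
freq = FreqDist(stems)

print(tokens)
print(stems)
print(freq.most_common(2))
```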

Use Cases

NLTK is used in various NLP tasks. Below are some common use cases:

- Text Classification: Categorize text into predefined groups.

- Tokenization: Split text into words or sentences.

- Sentiment Analysis: Determine the sentiment of a text.

- Named Entity Recognition (NER): Identify entities in text.

- Parsing: Analyze the grammatical structure of a sentence.

NLTK is a must-have for NLP in data science. It offers robust tools and resources.

spaCy

spaCy is an open-source library for advanced Natural Language Processing (NLP). It is designed to be fast, efficient, and production-ready. spaCy is popular in data science for its powerful features and ease of use.

Overview Of spaCy

spaCy is written in Python and Cython. It offers pre-trained models for multiple languages. The library supports tokenization, part-of-speech tagging, named entity recognition, and more. spaCy is known for its high performance and accuracy.

Key Features

spaCy boasts several key features that make it a must-have library for NLP:

- Tokenization: Efficient and accurate word tokenization.

- Part-of-Speech Tagging: Identifies the grammatical parts of text.

- Named Entity Recognition: Detects and labels entities such as people, organizations, and places.

- Lemmatization: Reduces words to their base forms.

- Dependency Parsing: Analyzes grammatical structure of sentences.

- Pre-trained Models: Includes models for multiple languages.

- Speed: Built for high performance and quick processing.
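The sketch below, assuming spaCy v3, shows tokenization and entity labelling without downloading a model. It starts from `spacy.blank("en")`, which provides only the tokenizer and lexical attributes, and adds a rule-based `entity_ruler` with hypothetical patterns in place of the statistical NER that trained pipelines such as `en_core_web_sm` provide.

```python
import spacy

# Blank English pipeline: tokenizer only, no model download required
nlp = spacy.blank("en")

# Rule-based entity ruler with hypothetical example patterns
ruler = nlp.add_pipe("entity_ruler")
ruler.add_patterns([
    {"label": "ORG", "pattern": "Hugging Face"},
    {"label": "GPE", "pattern": "Berlin"},
])

doc = nlp("spaCy and Hugging Face are popular in Berlin.")

tokens = [token.text for token in doc]
entities = [(ent.text, ent.label_) for ent in doc.ents]

print(tokens)
print(entities)

# With a trained pipeline, e.g. nlp = spacy.load("en_core_web_sm") after
# `python -m spacy download en_core_web_sm`, token.pos_, token.lemma_, and
# token.dep_ expose part-of-speech tags, lemmas, and dependency labels.
```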

Use Cases

spaCy is versatile and can be used in various NLP applications:

- Text Classification: Categorize texts into predefined categories.

- Information Extraction: Pull out specific information from large texts.

- Sentiment Analysis: Determine the sentiment of a given text with a trained text classifier.

- Chatbots: Build intelligent and responsive chatbots.

- Rule-Based Matching: Find words and phrases that match token patterns.

- Text Preprocessing: Tokenize and lemmatize text for downstream models.

- Named Entity Recognition: Identify and classify entities in text.

spaCy offers a robust set of tools for NLP, making it essential for data scientists. Its features and versatility make it a top choice for many NLP projects.


Gensim

Gensim is a powerful Python library for natural language processing (NLP). It excels in topic modeling and document similarity. This library is highly efficient and widely used in data science. Let’s dive into the specifics of Gensim.

Overview Of Gensim

Gensim stands out for its ability to process large text collections. It was developed by Radim Řehůřek and is open-source. Gensim is designed for unsupervised learning tasks. It is particularly popular for topic modeling and document similarity analysis.

Key Features

- Topic Modeling: Gensim excels at identifying topics in large datasets.

- Document Similarity: Easily find similarities between documents.

- Memory Efficiency: Processes large datasets with minimal memory usage.

- Scalability: Works well with small and large datasets.

- Compatibility: Integrates smoothly with NumPy and SciPy.

Use Cases

Gensim’s versatility makes it suitable for various NLP tasks. Here are some common use cases:

- Topic Modeling: Discover hidden themes in large text collections.

- Document Clustering: Group similar documents together.

- Text Summarization: Generate extractive summaries of lengthy texts (Gensim 3.x only; the `summarization` module was removed in Gensim 4.0).

- Information Retrieval: Improve search results by finding related documents.

- Semantic Analysis: Understand the context and meaning of text data.

Gensim is a must-have library for any data scientist working with text data. Its efficiency and scalability make it an excellent choice for NLP tasks.

Transformers By Hugging Face

Transformers by Hugging Face is a groundbreaking library for Natural Language Processing (NLP). It offers powerful tools to handle complex language tasks. This library is a must-have for data scientists working with text data.

Overview Of Transformers

The Transformers library focuses on pre-trained models for NLP. These models can perform tasks like text classification, translation, and summarization. The library supports many languages and is easy to use.

With Transformers, data scientists can quickly deploy state-of-the-art models. The library includes models like BERT, GPT-2, and T5. These models have been trained on vast amounts of data, making them highly accurate.
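The `pipeline` API is the quickest way to try one of these pre-trained models. This sketch assumes a recent `transformers` release with PyTorch installed and network access, since the checkpoint `distilbert/distilbert-base-uncased-finetuned-sst-2-english` (a DistilBERT model fine-tuned for binary sentiment) is downloaded from the Hugging Face Hub on first use. The two reviews are made-up inputs.

```python
from transformers import pipeline

# Assumed checkpoint: DistilBERT fine-tuned on SST-2 (labels POSITIVE / NEGATIVE)
MODEL_NAME = "distilbert/distilbert-base-uncased-finetuned-sst-2-english"

classifier = pipeline("sentiment-analysis", model=MODEL_NAME)

reviews = [
    "I love how easy this library is to use!",
    "The installation failed and the docs did not help.",
]

# The pipeline returns one {"label": ..., "score": ...} dict per input text
results = classifier(reviews)
for review, result in zip(reviews, results):
    print(f"{result['label']:>8} ({result['score']:.3f}): {review}")
```

Swapping the task string (for example `"summarization"` or `"ner"`) and the model name is usually all it takes to try another task.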

Key Features

- Pre-trained Models: Access to a variety of pre-trained models.

- Easy Integration: Simple APIs for quick model deployment.

- Multi-language Support: Models available in multiple languages.

- High Accuracy: State-of-the-art performance in NLP tasks.

- Community Support: Large community and extensive documentation.

| Feature | Benefit |
|---|---|
| Pre-trained Models | Save time on training from scratch |
| Easy Integration | Fast implementation in projects |
| Multi-language Support | Work with diverse datasets |
| High Accuracy | Reliable results in various tasks |
| Community Support | Access to help and resources |

Use Cases

Transformers by Hugging Face can be used in various NLP applications. Below are some common use cases:

- Text Classification: Categorize texts into predefined labels.

- Sentiment Analysis: Determine the sentiment of a text.

- Named Entity Recognition: Identify entities like names and dates in text.

- Question Answering: Build systems that answer questions based on text passages.

- Text Summarization: Generate concise summaries of longer texts.

- Language Translation: Translate text from one language to another.

- Text Generation: Create new text based on given prompts.

These use cases highlight the versatility of the Transformers library. It is a powerful tool in any data scientist’s toolkit.

TextBlob

TextBlob is a Python library for processing textual data. It provides a simple API for diving into common natural language processing (NLP) tasks. This library is particularly beginner-friendly and offers a range of functionalities.

Overview Of TextBlob

TextBlob makes text processing simple and effective. It is built on top of NLTK and Pattern. TextBlob allows you to perform a variety of NLP tasks without extensive coding.

With TextBlob, you can easily perform tasks like part-of-speech tagging, noun phrase extraction, sentiment analysis, and classification. Older releases also offered translation, but recent versions have removed it.

Key Features

| Feature | Description |
|---|---|
| Part-of-Speech Tagging | Identifies the grammatical parts of a sentence. |
| Noun Phrase Extraction | Extracts noun phrases from text. |
| Sentiment Analysis | Determines the sentiment of a given text. |
| Text Translation | Translated text between languages in older releases; removed in recent versions. |
| Text Classification | Classifies text into predefined categories. |

Use Cases

TextBlob is versatile and can be used in various applications:

- Sentiment Analysis: Determine the sentiment of customer reviews.

- Text Classification: Classify emails as spam or not spam.

- Language Translation: Translate content for multilingual users (older releases only).

- Noun Phrase Extraction: Pull key phrases, such as product names, out of text.

Here’s a simple example of how to use TextBlob:

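```python
from textblob import TextBlob

text = "I love coding in Python!"
blob = TextBlob(text)

# Sentiment analysis: polarity (-1.0 to 1.0) and subjectivity (0.0 to 1.0)
sentiment = blob.sentiment
print(f"Sentiment: {sentiment}")

# Noun phrase extraction: needs the corpora installed by
# `python -m textblob.download_corpora`
noun_phrases = blob.noun_phrases
print(f"Noun Phrases: {noun_phrases}")

# Text translation: blob.translate(to="es") depended on an unofficial Google
# Translate endpoint and has been removed from recent TextBlob releases,
# so use a dedicated translation API instead.
```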

TextBlob simplifies NLP tasks with minimal coding, making it accessible for data scientists and developers.

StanfordNLP

StanfordNLP is a popular Python library for natural language processing (NLP) developed by the Stanford NLP Group, and it is now maintained under the name Stanza. It provides tools for text analysis and understanding and is widely used in data science for various NLP tasks.

Overview Of StanfordNLP

StanfordNLP offers a range of tools for NLP. It supports multiple languages and provides accurate results. The library is designed to be user-friendly and easy to integrate.

StanfordNLP includes pre-trained models for various languages. It also provides tools for tokenization, parsing, and more.

Key Features

- Multi-language Support: Supports many languages for NLP tasks.

- Pre-trained Models: Offers pre-trained models for quick implementation.

- Tokenization: Breaks text into meaningful units.

- Part-of-Speech Tagging: Identifies grammatical parts of speech.

- Dependency Parsing: Analyzes grammatical structure of sentences.

Use Cases

StanfordNLP is used in various applications. Here are some common use cases:

- Sentiment Analysis: Determines the sentiment of a text.

- Named Entity Recognition: Identifies entities in text.

- Lemmatization: Reduces words to their dictionary forms.

- Multilingual Analysis: Applies the same pipeline to text in dozens of languages.

StanfordNLP is essential for NLP tasks in data science. It offers powerful tools for text analysis and understanding.

Flair

Flair is a Python library for natural language processing (NLP) that offers state-of-the-art models for a range of text-based tasks. It is known for its simplicity and ease of use.

Overview Of Flair

Flair simplifies complex NLP tasks. It provides pre-trained models for text classification, named entity recognition (NER), and more. Users can easily integrate these models into their projects. Flair’s framework is built on top of PyTorch, ensuring high performance.

Key Features

- Pre-trained Models: Use models trained on large datasets.

- Easy Integration: Simple API for quick implementation.

- Multi-lingual Support: Handles multiple languages effectively.

- Transfer Learning: Fine-tune models for specific tasks.

- Embeddings: Provides word and document embeddings.

Use Cases

| Task | Description | Example |
|---|---|---|
| Text Classification | Classify texts into predefined categories. | Spam detection in emails. |
| Named Entity Recognition (NER) | Identify entities like names and places in text. | Extract names from news articles. |
| Part-of-Speech Tagging | Tag words with their respective parts of speech. | Analyze grammatical structure. |
| Sentiment Analysis | Detect the sentiment of a text. | Analyze customer reviews. |
| Document Embeddings | Convert documents into numerical vectors. | Similarity search in document databases. |

Choosing The Right Library

Choosing the right Python library for Natural Language Processing (NLP) is crucial. The right library can save time and enhance your project’s accuracy. Let’s explore factors to consider and compare popular libraries for NLP in data science.

Factors To Consider

When selecting an NLP library, consider these factors:

- Ease of Use: How easy is the library to learn and use?

- Documentation: Is the documentation clear and helpful?

- Community Support: Is there a large community for help and resources?

- Performance: How well does the library perform with large datasets?

- Compatibility: Does it work well with other tools and libraries?

- Flexibility: Can it handle different NLP tasks?

- Updates: Is the library regularly updated?

Comparison Of Libraries

Below is a comparison of some popular NLP libraries:

| Library | Ease of Use | Documentation | Community Support | Performance | Compatibility | Flexibility | Updates |
|---|---|---|---|---|---|---|---|
| NLTK | High | Good | Large | Moderate | High | High | Regular |
| spaCy | Moderate | Excellent | Large | High | High | High | Regular |
| Gensim | Moderate | Good | Large | High | High | Moderate | Regular |
| TextBlob | High | Good | Moderate | Moderate | High | Moderate | Regular |
| Transformers | Moderate | Excellent | Large | High | High | High | Regular |

Selecting the right library depends on your project needs. Consider these factors and comparisons to make an informed decision.


Frequently Asked Questions

Which Python Libraries Are Used For NLP?

Popular Python libraries for NLP include NLTK, spaCy, and TextBlob. These libraries offer tools for text processing. Use Gensim for topic modeling and word embeddings. Hugging Face’s Transformers is excellent for advanced NLP tasks.

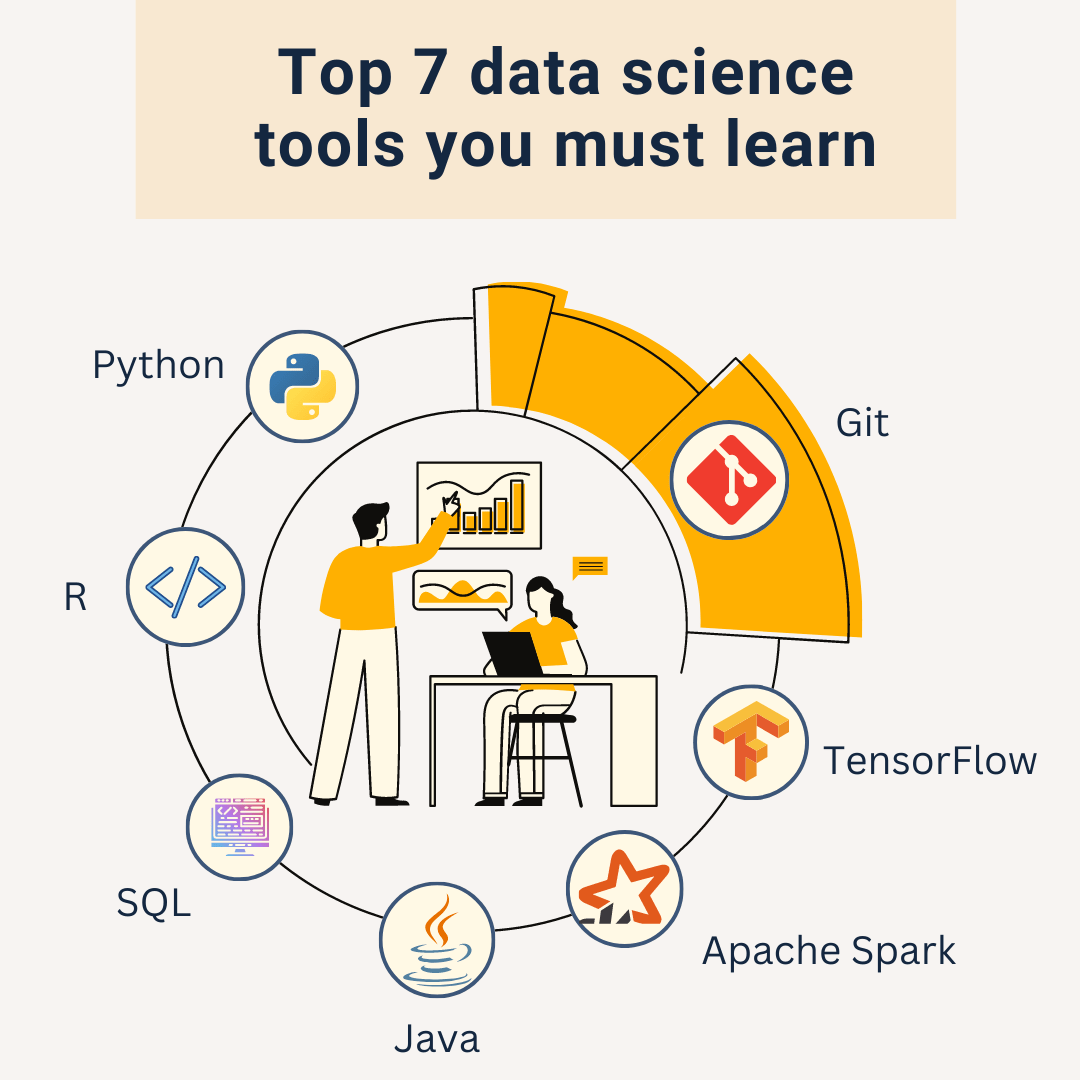

Which Python Libraries Are Essential For Data Science?

For data science, essential Python libraries include Pandas, NumPy, Matplotlib, Scikit-learn, and TensorFlow. These tools enable efficient data analysis, visualization, and machine learning tasks.

Which Python Libraries Are Most Popular In Data Science?

Popular Python libraries for data science include Pandas, NumPy, Matplotlib, SciPy, Scikit-learn, TensorFlow, and Keras. These libraries offer robust tools for data manipulation, visualization, and machine learning.

What Are The Five Regression Python Modules That Every Data Scientist Must Know?

The five essential regression Python modules are Scikit-Learn, Statsmodels, TensorFlow, PyTorch, and XGBoost. These modules offer robust tools for data analysis and machine learning.

Conclusion

Mastering these seven Python libraries can significantly boost your NLP projects in data science. Each library offers unique tools and features, so incorporate the ones that fit your workflow to improve efficiency and accuracy. Keep experimenting and follow each library's releases to stay ahead in the field.

Happy coding!