Ensure data quality in your data science projects with SQL-based cleaning techniques. Learn to handle missing values, remove duplicates, and standardize formats to improve the accuracy of your analysis.

Data cleansing and preprocessing are critical steps in data management. SQL, a powerful language for managing and manipulating databases, excels in these tasks. Through SQL, users can efficiently identify and correct errors, remove duplicates, and standardize data formats. Proper data cleansing ensures data integrity, leading to more reliable analytics and business decisions.

Preprocessing involves transforming raw data into a usable format, enabling more accurate insights. Using SQL for these processes helps maintain data consistency and improves the overall quality of data analysis. Efficient data management is crucial for businesses that want to make data-driven decisions and maintain a competitive advantage.


Introduction To Data Cleansing

Clean data is vital for accurate analysis. Errors in the data carry through to reports and models and can lead to wrong decisions, while clean data reduces rework, supports reliable models, and produces better insights. Common data quality problems include:

- Missing values cause incomplete analysis.

- Duplicate entries lead to biased results.

- Inconsistent formats create confusion.

- Outliers can skew results.

- Incorrect data misleads analysis.

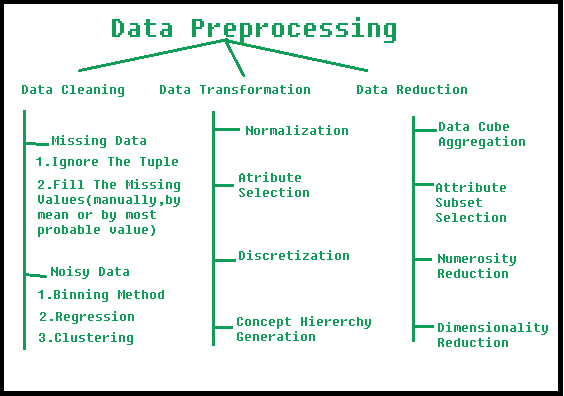

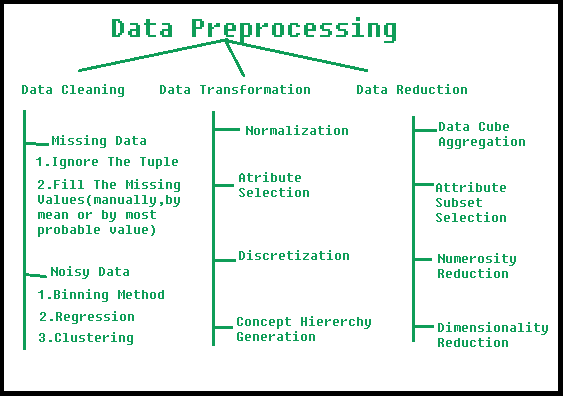

Credit: www.geeksforgeeks.org

SQL Basics For Data Preprocessing

SQL (Structured Query Language) is the standard language for managing and manipulating relational databases. A handful of commands cover most preprocessing work: SELECT fetches data from tables, INSERT adds new records, UPDATE changes existing records, and DELETE removes records. The WHERE clause filters rows, and JOIN combines data from multiple tables.

Database management systems (DBMSs) such as MySQL, PostgreSQL, and SQL Server store and manage data, and they use SQL to interact with it. A DBMS keeps data organized and helps protect its security and integrity.

Identifying Data Anomalies

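Missing values can cause problems, and SQL finds them with the `IS NULL` operator. For example, `SELECT * FROM table_name WHERE column_name IS NULL;` returns every row in which `column_name` is missing. Note that `column_name = NULL` does not work: any comparison with NULL evaluates to unknown rather than true, so it matches no rows.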
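The sketch below runs the `IS NULL` check against SQLite through Python's built-in `sqlite3` module, so it needs no database server. The `customers` table and its rows are hypothetical. It also shows that an `= NULL` comparison silently matches nothing.

```python
import sqlite3

# In-memory SQLite database with a hypothetical "customers" table.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, email TEXT);
    INSERT INTO customers (name, email) VALUES
        ('Ana',  'ana@example.com'),
        ('Ben',  NULL),
        ('Cara', 'cara@example.com');
""")

# IS NULL matches rows where the value is missing.
missing = conn.execute(
    "SELECT id, name FROM customers WHERE email IS NULL"
).fetchall()
print(missing)  # [(2, 'Ben')]

# "= NULL" never evaluates to true, so this returns no rows at all.
wrong = conn.execute("SELECT id FROM customers WHERE email = NULL").fetchall()
print(wrong)  # []
```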

Duplicate records can distort data, and SQL can locate them with the `GROUP BY` and `HAVING` clauses. For example, `SELECT column_name, COUNT(*) FROM table_name GROUP BY column_name HAVING COUNT(*) > 1;` lists every value that appears more than once, along with how many times it appears.
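Here is a minimal SQLite sketch of that duplicate check, run from Python's `sqlite3` module. It assumes a hypothetical `customers` table in which `email` should be unique.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT);
    INSERT INTO customers (email) VALUES
        ('ana@example.com'), ('ben@example.com'),
        ('ana@example.com'), ('ana@example.com');
""")

# Group rows by the column that should be unique and keep only the
# groups that contain more than one row.
dupes = conn.execute("""
    SELECT email, COUNT(*) AS n
    FROM customers
    GROUP BY email
    HAVING COUNT(*) > 1
""").fetchall()
print(dupes)  # [('ana@example.com', 3)]
```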

Data Cleaning Techniques

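Null values can cause issues in data analysis; for example, aggregates such as AVG and COUNT(column) silently skip them. The COALESCE function returns its first non-null argument, so COALESCE(column, default) replaces nulls with a default value at query time without changing the stored data. To fix the data itself, combine the UPDATE statement with an IS NULL condition: the condition identifies records with missing data, and UPDATE fills in the missing fields permanently. Make sure all required fields are filled, because this makes the data more reliable.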
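The sketch below contrasts the two approaches on a hypothetical `customers` table in SQLite, using Python's `sqlite3` module. The default value `'Unknown'` is an arbitrary choice for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, country TEXT);
    INSERT INTO customers (name, country) VALUES
        ('Ana', 'PT'), ('Ben', NULL), ('Cara', NULL);
""")

# Option 1: substitute a default at query time; the stored data is unchanged.
view = conn.execute(
    "SELECT name, COALESCE(country, 'Unknown') FROM customers ORDER BY id"
).fetchall()
print(view)  # [('Ana', 'PT'), ('Ben', 'Unknown'), ('Cara', 'Unknown')]

# Option 2: fix the stored data permanently with UPDATE ... WHERE ... IS NULL.
cur = conn.execute("UPDATE customers SET country = 'Unknown' WHERE country IS NULL")
conn.commit()
print(cur.rowcount)  # 2 rows updated
```

COALESCE is the safer choice when you are not yet sure what the right default is, since it leaves the original nulls in place.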

Duplicates can skew analysis results. Adding the DISTINCT keyword to a SELECT statement removes duplicate rows from the query result, but the underlying table is unchanged. To remove duplicates from the table itself, use the ROW_NUMBER window function to number the rows within each group of duplicates, then use a DELETE statement to remove every row numbered higher than 1. Always verify the data after cleaning.
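A minimal sketch of both techniques, assuming a hypothetical `customers` table in SQLite (accessed through Python's `sqlite3` module) where `email` identifies a customer and the row with the lowest `id` is the one to keep. The exact DELETE syntax for this pattern varies between database systems.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT);
    INSERT INTO customers (email) VALUES
        ('ana@example.com'), ('ben@example.com'),
        ('ana@example.com'), ('ben@example.com'), ('cara@example.com');
""")

# DISTINCT de-duplicates the query result only; the table still has 5 rows.
unique_emails = conn.execute(
    "SELECT DISTINCT email FROM customers ORDER BY email"
).fetchall()

# Number the rows inside each group of duplicates (lowest id first)
# and delete every row after the first one.
conn.execute("""
    DELETE FROM customers
    WHERE id IN (
        SELECT id FROM (
            SELECT id,
                   ROW_NUMBER() OVER (PARTITION BY email ORDER BY id) AS rn
            FROM customers
        ) AS ranked
        WHERE rn > 1
    )
""")
conn.commit()

remaining = conn.execute("SELECT id, email FROM customers ORDER BY id").fetchall()
print(remaining)
# [(1, 'ana@example.com'), (2, 'ben@example.com'), (5, 'cara@example.com')]
```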

Data Transformation Methods

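Normalization is a method to scale data. A common form, min-max normalization, rescales each value x as (x - min) / (max - min), which maps the data into the range 0 to 1. This makes values measured on different scales comparable. Normalization is common in machine learning, where many algorithms, such as distance-based methods like k-nearest neighbors, perform better when features share a similar scale.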
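Min-max normalization can be computed directly in SQL with window aggregates. This sketch uses SQLite through Python's `sqlite3` module and a hypothetical `sales` table; `NULLIF` is added as a guard against dividing by zero when every value is the same.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE sales (id INTEGER PRIMARY KEY, amount REAL);
    INSERT INTO sales (amount) VALUES (10), (20), (30), (50);
""")

# (x - min) / (max - min); MIN() OVER () and MAX() OVER () see the whole table
# while still returning one output row per input row.
rows = conn.execute("""
    SELECT id,
           (amount - MIN(amount) OVER ()) /
           NULLIF(MAX(amount) OVER () - MIN(amount) OVER (), 0) AS amount_scaled
    FROM sales
    ORDER BY id
""").fetchall()
print(rows)  # [(1, 0.0), (2, 0.25), (3, 0.5), (4, 1.0)]
```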

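Standardization is another method for scaling data. It rescales each value as (x - mean) / standard deviation, so the result, often called a z-score, has a mean of 0 and a standard deviation of 1. This method is useful in algorithms that assume normally distributed data, although standardization only shifts and rescales the values; it does not make a skewed distribution normal. Standardization puts features on a common scale, which makes them easier to compare and analyze.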
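The sketch below computes z-scores in SQLite, run from Python's `sqlite3` module, using the population standard deviation on a hypothetical `sales` table. Not every SQLite build ships a `SQRT()` function, so the sketch registers Python's `math.sqrt` under that name; many other databases provide a standard deviation aggregate directly (for example, `STDDEV_POP` in PostgreSQL and MySQL).

```python
import math
import sqlite3

conn = sqlite3.connect(":memory:")
# Make SQRT() available regardless of how SQLite was compiled.
conn.create_function("sqrt", 1, math.sqrt)
conn.executescript("""
    CREATE TABLE sales (id INTEGER PRIMARY KEY, amount REAL);
    INSERT INTO sales (amount) VALUES (2), (4), (4), (4), (5), (5), (7), (9);
""")

# z = (x - mean) / std, where std is the population standard deviation.
rows = conn.execute("""
    WITH stats AS (
        SELECT AVG(amount) AS mean FROM sales
    ),
    spread AS (
        SELECT SQRT(AVG((amount - mean) * (amount - mean))) AS std
        FROM sales, stats
    )
    SELECT id, (amount - mean) / std AS amount_z
    FROM sales, stats, spread
    ORDER BY id
""").fetchall()
print([z for _, z in rows])
# [-1.5, -0.5, -0.5, -0.5, 0.0, 0.0, 1.0, 2.0]  (mean 5, std 2)
```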

Advanced SQL For Data Quality

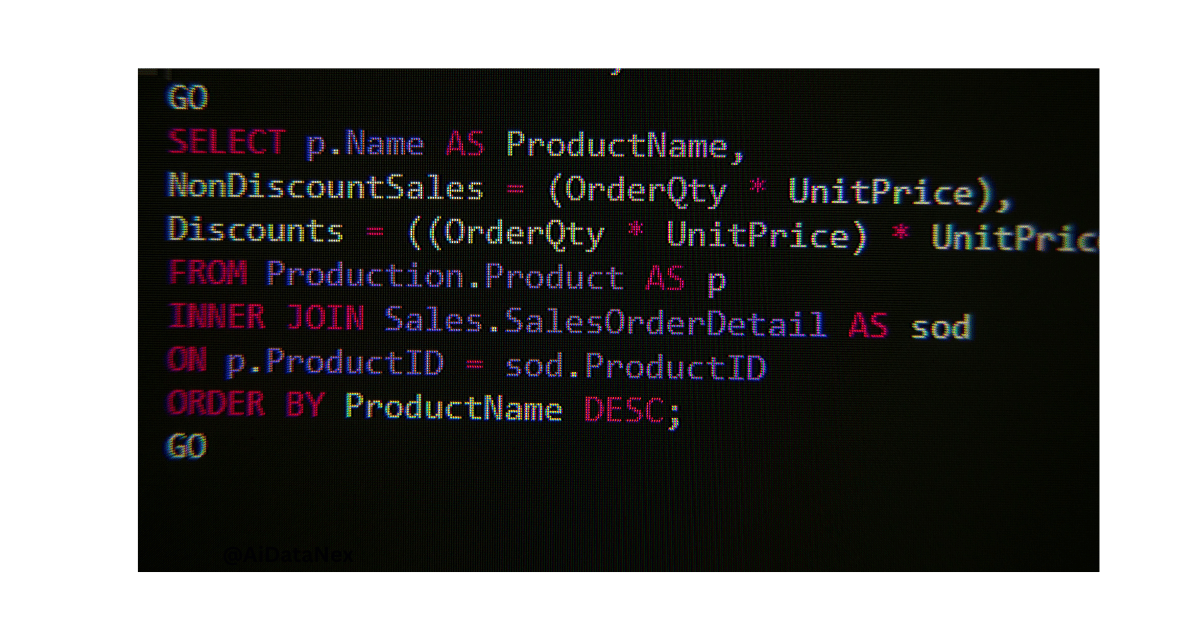

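Joins link tables together, which makes them useful for checking data consistency. Use INNER JOIN to return only the rows that match in both tables. Use LEFT JOIN to keep all rows from the left table, with NULLs wherever the right table has no match; filtering on those NULLs finds orphaned records, such as orders that reference a customer who does not exist. RIGHT JOIN does the same for the right table. Used this way, joins help you spot and fix inconsistencies between tables.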
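A minimal consistency check with joins, assuming hypothetical `customers` and `orders` tables in SQLite (accessed via Python's `sqlite3` module). SQLite does not enforce foreign keys by default, which is why the orphaned order can be inserted here at all.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL);
    INSERT INTO customers (id, name) VALUES (1, 'Ana'), (2, 'Ben');
    INSERT INTO orders (id, customer_id, total) VALUES
        (100, 1, 25.0), (101, 2, 40.0), (102, 3, 15.0);
""")

# LEFT JOIN keeps every order; orders without a matching customer get NULL
# in the customer columns, which is how orphaned rows are detected.
orphans = conn.execute("""
    SELECT o.id, o.customer_id
    FROM orders AS o
    LEFT JOIN customers AS c ON c.id = o.customer_id
    WHERE c.id IS NULL
""").fetchall()
print(orphans)  # [(102, 3)]

# INNER JOIN returns only orders that have a matching customer.
matched = conn.execute("""
    SELECT o.id, c.name
    FROM orders AS o
    INNER JOIN customers AS c ON c.id = o.customer_id
    ORDER BY o.id
""").fetchall()
print(matched)  # [(100, 'Ana'), (101, 'Ben')]
```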

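Window functions perform calculations across a set of rows related to the current row. Unlike GROUP BY, they do not collapse the rows into a single output row. ROW_NUMBER() assigns a unique sequential number to each row within a partition. RANK() gives tied rows the same rank and skips the ranks that follow, so the sequence can have gaps (1, 2, 2, 4). DENSE_RANK() also gives tied rows the same rank, but without gaps (1, 2, 2, 3). These functions are useful for tasks such as finding duplicates or picking the latest record for each key.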
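The difference between the three ranking functions is easiest to see on tied values. This sketch uses SQLite through Python's `sqlite3` module and a hypothetical `scores` table; `name` is added as a tie-breaker so that ROW_NUMBER() is deterministic.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE scores (name TEXT, score INTEGER);
    INSERT INTO scores VALUES ('Ana', 90), ('Ben', 85), ('Cara', 85), ('Dev', 70);
""")

# Every input row is kept; each function only adds a column.
rows = conn.execute("""
    SELECT name, score,
           ROW_NUMBER() OVER (ORDER BY score DESC, name) AS row_num,
           RANK()       OVER (ORDER BY score DESC)       AS rnk,
           DENSE_RANK() OVER (ORDER BY score DESC)       AS dense_rnk
    FROM scores
    ORDER BY score DESC, name
""").fetchall()
for row in rows:
    print(row)
# ('Ana', 90, 1, 1, 1)
# ('Ben', 85, 2, 2, 2)
# ('Cara', 85, 3, 2, 2)
# ('Dev', 70, 4, 4, 3)
```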

Automating Data Preprocessing

Stored procedures can streamline your data preprocessing tasks. They allow for reusable code and ensure consistency in data handling. Error handling becomes easier with stored procedures. They can handle data validation, transformation, and aggregation in one place.

Using stored procedures reduces manual intervention, which helps maintain data integrity and saves time. Stored procedures can also be run on a schedule, for example with SQL Server Agent, MySQL's Event Scheduler, or the pg_cron extension for PostgreSQL, so the data stays up to date.
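SQLite, which the sketch below uses through Python's `sqlite3` module, has no stored procedures, so a plain Python function stands in for one here. The hypothetical `clean_customers` routine bundles a few cleaning steps for a hypothetical `customers` table and runs them in a single transaction. In PostgreSQL, SQL Server, or MySQL, the same SQL statements would go in the body of a `CREATE PROCEDURE` definition.

```python
import sqlite3


def clean_customers(conn: sqlite3.Connection) -> None:
    """Hypothetical reusable cleaning routine (stand-in for a stored procedure).

    All steps run in one transaction: if any step fails, none are applied.
    """
    with conn:  # commits on success, rolls back on an exception
        # Standardize formats.
        conn.execute("UPDATE customers SET email = LOWER(TRIM(email))")
        # Fill missing values with a default.
        conn.execute("UPDATE customers SET country = 'Unknown' WHERE country IS NULL")
        # Keep only the lowest id for each email.
        conn.execute("""
            DELETE FROM customers
            WHERE id NOT IN (SELECT MIN(id) FROM customers GROUP BY email)
        """)


conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT, country TEXT);
    INSERT INTO customers (email, country) VALUES
        ('Ana@Example.com ', 'PT'), ('ana@example.com', NULL), ('ben@example.com', NULL);
""")
clean_customers(conn)
result = conn.execute("SELECT id, email, country FROM customers ORDER BY id").fetchall()
print(result)
# [(1, 'ana@example.com', 'PT'), (3, 'ben@example.com', 'Unknown')]
```

Because the steps share one transaction, a failure halfway through leaves the table exactly as it was, which is one of the consistency benefits the paragraph above describes.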

Triggers automatically execute predefined actions in response to certain events. They help in maintaining data integrity and consistency. Triggers can automate tasks like data validation, auditing, and synchronization.

Triggers reduce the need for manual checks, which cuts down on human error and makes data processing more reliable. A trigger can be set to fire on INSERT, UPDATE, or DELETE operations, so every change to the data goes through the same rules.
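A minimal SQLite sketch of two common trigger uses, validation and auditing, run from Python's `sqlite3` module. The table, trigger, and column names are hypothetical, and trigger syntax differs between database systems (`RAISE(ABORT, ...)` is SQLite-specific).

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT);
    CREATE TABLE customer_audit (
        customer_id INTEGER, old_email TEXT, new_email TEXT,
        changed_at TEXT DEFAULT CURRENT_TIMESTAMP
    );

    -- Validation: reject inserts whose email is missing or has no '@'.
    CREATE TRIGGER customers_validate_email
    BEFORE INSERT ON customers
    WHEN NEW.email IS NULL OR instr(NEW.email, '@') = 0
    BEGIN
        SELECT RAISE(ABORT, 'invalid email');
    END;

    -- Auditing: record every email change.
    CREATE TRIGGER customers_audit_email
    AFTER UPDATE OF email ON customers
    BEGIN
        INSERT INTO customer_audit (customer_id, old_email, new_email)
        VALUES (OLD.id, OLD.email, NEW.email);
    END;
""")

conn.execute("INSERT INTO customers (email) VALUES ('ana@example.com')")
rejected = False
try:
    conn.execute("INSERT INTO customers (email) VALUES ('not-an-email')")
except sqlite3.IntegrityError as exc:
    rejected = True
    print("rejected:", exc)
conn.execute("UPDATE customers SET email = 'ana@example.org' WHERE id = 1")
conn.commit()

audit_rows = conn.execute(
    "SELECT customer_id, old_email, new_email FROM customer_audit"
).fetchall()
print(audit_rows)  # [(1, 'ana@example.com', 'ana@example.org')]
```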

Best Practices For Data Management

Regular data audits find errors in your data early, when they are cheaper to fix. Always check for duplicates and missing values, and verify that values are accurate. Audits should be done monthly or quarterly; automated tools can make the process faster. Regular checks improve data quality over time, keep your data accurate and up to date, and lead to better decision-making.
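A single summary query can serve as a basic, repeatable audit. This sketch assumes a hypothetical `customers` table in SQLite (run from Python's `sqlite3` module) and reports the total row count, missing values per column, and the number of surplus rows that repeat an email already present.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, email TEXT);
    INSERT INTO customers (name, email) VALUES
        ('Ana', 'ana@example.com'), ('Ben', NULL),
        (NULL, 'ana@example.com'), ('Dev', 'dev@example.com');
""")

# COUNT(email) counts non-null emails; subtracting the distinct count
# gives the number of extra rows that repeat an existing email.
audit = conn.execute("""
    SELECT COUNT(*)                                       AS total_rows,
           SUM(CASE WHEN name  IS NULL THEN 1 ELSE 0 END) AS missing_name,
           SUM(CASE WHEN email IS NULL THEN 1 ELSE 0 END) AS missing_email,
           COUNT(email) - COUNT(DISTINCT email)           AS duplicate_emails
    FROM customers
""").fetchone()
print(audit)  # (4, 1, 1, 1)
```

Saving each run's result with a date makes it easy to see whether data quality is improving between audits.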

Good documentation explains your data clearly so that others can understand it easily. Metadata records details such as data sources and formats, which makes data integration simpler, improves usability, and supports data governance. Update the documentation whenever the data changes, and keep a record of all changes. Proper documentation saves time later, keeps everyone on the same page, and makes data more reliable and useful.

Case Studies

One company used SQL to clean its customer data, which contained many duplicate entries. It removed the duplicates using unique keys, which made the data cleaner and more useful.

Another example is a retail store whose sales data had missing values. The store used SQL to fill the gaps, filling in each missing value based on similar entries.

Good data cleansing makes your data more reliable, and clean data supports better decisions. SQL is a strong tool for this job because it handles large datasets efficiently. Duplicates and missing values are the most common problems, so always check for them; fixing them improves your data.


Frequently Asked Questions

How To Use SQL For Data Cleaning?

Use SQL for data cleaning by removing duplicates, correcting errors, and standardizing formats. Useful statements and clauses include DELETE, UPDATE, and JOIN. Standardize text with the TRIM, LOWER, and UPPER functions, and validate data with constraints to create clean, consistent datasets.
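A minimal text-standardization sketch in SQLite, run from Python's `sqlite3` module. The `customers` table is hypothetical, and the choice to lower-case emails and upper-case country codes is an assumption for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT, country TEXT);
    INSERT INTO customers (email, country) VALUES
        ('  Ana@Example.COM ', 'pt'), ('ben@example.com', ' Pt');
""")

# Strip surrounding whitespace, lower-case emails, upper-case country codes.
conn.execute("""
    UPDATE customers
    SET email   = LOWER(TRIM(email)),
        country = UPPER(TRIM(country))
""")
conn.commit()

cleaned = conn.execute("SELECT email, country FROM customers ORDER BY id").fetchall()
print(cleaned)  # [('ana@example.com', 'PT'), ('ben@example.com', 'PT')]
```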

How To Do Data Preprocessing In SQL?

To preprocess data in SQL, correct values with `UPDATE` and remove duplicates with `DELETE`. Combine and summarize data with `JOIN` and `GROUP BY`. Convert data types with `CAST` (or `CONVERT` in SQL Server and MySQL), and enforce consistency with `CHECK` constraints.
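The sketch below combines `CAST` and `CHECK` constraints in SQLite, run from Python's `sqlite3` module. The hypothetical `raw_sales` table holds imported text, and the hypothetical `sales` table has typed columns with constraints. Rows are loaded one at a time so that a single bad row is rejected without aborting the whole load.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Raw import table: everything arrives as text.
    CREATE TABLE raw_sales (sku TEXT, qty TEXT, price TEXT);
    INSERT INTO raw_sales VALUES ('A1', '3', '9.99'), ('B2', '-1', '4.50');

    -- Clean table: typed columns plus CHECK constraints.
    CREATE TABLE sales (
        sku   TEXT NOT NULL,
        qty   INTEGER NOT NULL CHECK (qty > 0),
        price REAL NOT NULL CHECK (price >= 0)
    );
""")

# CAST converts the text values; the CHECK constraint rejects invalid rows.
loaded, rejected = 0, 0
for sku, qty, price in conn.execute("SELECT sku, qty, price FROM raw_sales").fetchall():
    try:
        conn.execute(
            "INSERT INTO sales VALUES (?, CAST(? AS INTEGER), CAST(? AS REAL))",
            (sku, qty, price),
        )
        loaded += 1
    except sqlite3.IntegrityError:
        rejected += 1
conn.commit()

print(loaded, rejected)  # 1 1
clean_rows = conn.execute("SELECT sku, qty, price FROM sales").fetchall()
print(clean_rows)  # [('A1', 3, 9.99)]
```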

How To Clean Up A SQL Database?

Clean up a SQL database by removing unused tables, adding indexes on frequently queried columns, deleting duplicate records, and archiving old data. Review and optimize queries regularly, and perform routine maintenance checks to keep the database efficient and reliable.

How To Do Data Cleaning And Preprocessing?

Data cleaning and preprocessing involve removing duplicates, handling missing values, normalizing data, and correcting errors. Besides SQL, tools like Python, R, and Excel can handle these tasks.

Conclusion

Mastering data cleansing and preprocessing using SQL is essential for accurate data analysis. Efficient SQL techniques streamline data workflows. Clean data ensures reliable insights and better decision-making. Invest time in learning SQL for data preparation. Your efforts will pay off with improved data quality and analysis efficiency.